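This article covers a fully offline installation in UPI (User Provisioned Infrastructure) mode. It assumes the machines can only be given static IPs. We have no control over the network configuration and cannot set up DHCP or PXE. The machines can be physical or virtual.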

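Introduction

About OCP

OpenShift comes in two editions:
- OKD, the community edition, which is updated slowly.
- OCP (OpenShift Container Platform), the enterprise edition.

Individuals can also install OCP. Without a purchased license you simply don't get official Red Hat support.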

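Unlike other Kubernetes platforms such as Rancher or k3s, OpenShift implements its own three "cs" components. It also contributed the RBAC and Ingress code to Kubernetes. Its nodes run Red Hat Enterprise Linux CoreOS (RHCOS), a container-oriented operating system that combines some of the best features and functions of the CoreOS and Red Hat Atomic Host operating systems.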

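RHCOS is designed specifically for running containerized applications from OpenShift Container Platform. It works with new tools to provide fast installation, Operator-based management, and simplified upgrades.

In OCP, master nodes must run RHCOS, while worker nodes can run either RHCOS or RHEL. The cluster also runs a large number of operators. Because they work through Kubernetes' declarative YAML, you need to care much less about the OS and the cluster internals. Even cluster upgrades are performed by the cluster-version-operator.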

OCP installation flow

Let's start from the official diagram and walk through the flow it shows.

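The installation proceeds in four steps:

1. On one machine (the bastion), prepare PXE, the files that describe the cluster to be installed (Ignition), DNS, and a load balancer.
2. Boot the bootstrap host, either via DHCP or by manually mounting the RHCOS ISO and typing a boot cmdline at the installer screen. The cmdline points the host at the bastion, from which it fetches bootstrap.ign and os.raw.gz to complete the installation.
3. Boot the master nodes. They fetch master.ign and get their machine-config from the bootstrap node.
4. Boot the worker nodes, which follow the same pattern.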

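The certificates in the bootstrap Ignition file expire after 24 hours, so the installation has to be completed within that window. This is by design: the bootstrap node only exists to bootstrap the cluster. Once installation finishes, the control plane is handed over to the masters.

Because the API endpoint moves from the bootstrap node to the masters, we need a load balancer in front of them. It will later also front the cluster's ingress controllers.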
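Because the bootstrap certificates expire after 24 hours, it helps to check how old the Ignition files are before booting machines. Below is a minimal sketch. It assumes the file modification time is a good enough proxy for when the file was generated, and it uses GNU `stat`/`date`/`touch` as on CentOS 7. `ign_fresh` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# ign_fresh FILE [MAX_SECONDS]
# Hypothetical helper: succeed if FILE was modified less than MAX_SECONDS ago
# (default 24h). The mtime is only a proxy for the certificate issue time.
# Returns 2 if FILE cannot be stat'ed.
ign_fresh() {
    local file=$1 max=${2:-$((24 * 3600))}
    local now mtime
    now=$(date +%s)
    mtime=$(stat -c %Y "$file") || return 2   # GNU stat: mtime in epoch seconds
    (( now - mtime < max ))
}

# Example: check before booting the bootstrap host
#   ign_fresh bootstrap.ign || echo "bootstrap.ign is older than 24h, regenerate it"
```

The helper only returns a status code, so it composes with `||`/`&&` in larger scripts.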

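First, on one machine (the bastion), prepare PXE, the files describing the cluster (Ignition), DNS, and the load balancer. This corresponds to the Cluster Installer in the diagram.

On the bastion, hand-write a YAML file describing the cluster. Then use `openshift-install` to turn it into the cluster deployment manifests and Ignition files. DNS, the load balancer, and the image registry must be ready before the bootstrap host is started.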
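For reference, the hand-written YAML is `install-config.yaml`. Below is a minimal sketch of a UPI bare-metal (platform `none`) config for this article's plan, assuming:
- cluster name openshift4 and base domain example.com;
- 3 masters;
- compute replicas set to 0, because UPI workers are added by hand;
- the documented default network CIDRs.

The `pullSecret`/`sshKey` values are placeholders. A mirrored install also needs `additionalTrustBundle` (the registry's CA) and the `imageContentSources` printed by `oc adm release mirror`; these are not shown. `write_install_config` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# write_install_config DIR: hypothetical helper that writes a skeleton
# install-config.yaml for this article's cluster into DIR.
write_install_config() {
    local dir=$1
    mkdir -p "$dir"
    cat > "$dir/install-config.yaml" << 'EOF'
apiVersion: v1
baseDomain: example.com
compute:
- hyperthreading: Enabled
  name: worker
  replicas: 0
controlPlane:
  hyperthreading: Enabled
  name: master
  replicas: 3
metadata:
  name: openshift4
networking:
  clusterNetwork:
  - cidr: 10.128.0.0/14
    hostPrefix: 23
  networkType: OpenShiftSDN
  serviceNetwork:
  - 172.30.0.0/16
platform:
  none: {}
pullSecret: '<contents of pull-secret.json>'
sshKey: '<contents of ~/.ssh/id_rsa.pub>'
EOF
}

dir=$(mktemp -d)
write_install_config "$dir"
# openshift-install consumes install-config.yaml, so keep a copy
cp "$dir/install-config.yaml" "$dir/install-config.yaml.bak"
# Then, on the bastion (not executed here):
#   openshift-install create manifests --dir "$dir"
#   openshift-install create ignition-configs --dir "$dir"
```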

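The bootstrap host boots via DHCP or from the mounted RHCOS ISO. At the installer screen you type the boot cmdline, which includes the network configuration, nameserver, install_dev, image_url and ignition_url. After the OS is installed and the machine reboots, the bootstrap machine runs the bootkube.sh script. The script uses CRI-O and podman to start the containers and pods of a temporary control plane, then waits for the masters to join.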
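The boot cmdline is a single line of kernel arguments. Below is a sketch of how it can be assembled. It assumes the dracut static syntax `ip=<ip>::<gateway>:<netmask>:<hostname>:<interface>:none` and the 4.5-era `coreos.inst.*` installer arguments; check the docs for your RHCOS version. The gateway, netmask, NIC name (`ens192`), HTTP port (8080) and the file paths on the bastion are hypothetical and must be adjusted.

```bash
#!/usr/bin/env bash
# rhcos_cmdline IP FQDN ROLE
# Print the kernel arguments to type at the RHCOS ISO boot prompt.
# Gateway, netmask, NIC, HTTP port and paths are assumptions, not from the article.
rhcos_cmdline() {
    local ip=$1 host=$2 role=$3
    local gw=10.225.45.1 mask=255.255.255.0 nic=ens192   # hypothetical
    local dns=10.225.45.250                              # the bastion runs DNS
    local http=http://10.225.45.250:8080                 # hypothetical HTTP port
    local args=(
        "ip=${ip}::${gw}:${mask}:${host}:${nic}:none"
        "nameserver=${dns}"
        "coreos.inst=yes"
        "coreos.inst.install_dev=sda"
        "coreos.inst.image_url=${http}/install/rhcos-metal.raw.gz"
        "coreos.inst.ignition_url=${http}/ignition/${role}.ign"
    )
    echo "${args[*]}"   # join with single spaces
}

rhcos_cmdline 10.225.45.223 bootstrap.openshift4.example.com bootstrap
rhcos_cmdline 10.225.45.251 master1.openshift4.example.com master
```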

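Master nodes: if, like me, you have no DHCP, type the boot cmdline manually. After installation, each master fetches its resources remotely from the bootstrap host, completes its bootstrapping, and registers as a node. When the bootstrap node sees the nodes register, it deploys operators into the cluster. These run installer pods (named like `installer-<n>-<master>`); for example, the bootstrap host has the masters build the etcd cluster.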

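The bootstrap host uses the new etcd cluster to start a temporary Kubernetes control plane.

The temporary control plane starts the production control plane on the master nodes.

The temporary control plane shuts down and passes control to the production control plane.

The bootstrap host injects the OCP components into the production control plane.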

This whole process is driven mainly by bootkube.sh on the bootstrap node. When it finishes, it creates `configmap/bootstrap` in the `kube-system` namespace to record its status.
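You can poll that configmap to see whether bootstrapping has finished. Below is a minimal sketch. It assumes the configmap's `data.status` field reads `complete` once bootkube.sh is done; as I understand it, this is also what `openshift-install wait-for bootstrap-complete` checks, and that command is the official way to wait. `wait_bootstrap` is a hypothetical helper and needs `oc` plus a working kubeconfig.

```bash
#!/usr/bin/env bash
# wait_bootstrap [TRIES] [INTERVAL]: poll kube-system/configmap bootstrap
# until data.status is "complete". Requires oc and a valid KUBECONFIG.
wait_bootstrap() {
    local tries=${1:-60} interval=${2:-10} status i
    for (( i = 0; i < tries; i++ )); do
        status=$(oc -n kube-system get configmap bootstrap \
                 -o jsonpath='{.data.status}' 2>/dev/null)
        if [ "$status" = complete ]; then
            echo "bootstrap complete"
            return 0
        fi
        sleep "$interval"
    done
    echo "bootstrap not complete after $tries tries" >&2
    return 1
}

# Usage on the bastion (the install directory is a placeholder):
#   export KUBECONFIG=<install-dir>/auth/kubeconfig
#   wait_bootstrap 90 10
```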

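Once the bootstrap process completes, the OCP cluster is deployed. The cluster then downloads and configures the remaining components needed for day-to-day operation, including creating compute nodes and installing other services through Operators.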

Server planning

Resource planning

The minimum requirements from the official documentation are:

| Machine | Operating System | vCPU | RAM | Storage |
| --- | --- | --- | --- | --- |
| Bootstrap | RHCOS | 4 | 16 GB | 120 GB |
| Control plane | RHCOS | 4 | 16 GB | 120 GB |
| Compute | RHCOS or RHEL 7.6 | 2 | 8 GB | 120 GB |

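My machines are listed below.

Most official images live on quay.io, which is hard to pull from behind the Great Firewall. In practice, then, you deploy a local image registry. I use Quay, which listens on port 443 (I haven't found a way to change that port). The load balancer also serves the ingress controllers' HTTP and HTTPS (443) traffic, so the registry gets its own machine. If you use another registry, such as the plain Docker `registry`, you can put it on the load balancer node.

The role and sizing of each node are shown below. The bootstrap node can be deleted after installation, so it can later be reused as a worker. A single-master deployment, following guides found online, did not work for me; I'll explain why later.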

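The servers are planned as follows:

Three control plane nodes, running etcd and the control plane components.

One compute node, running the actual workloads.

One bootstrap host, which performs the installation and is used as a worker after the cluster is deployed.

One base node (the bastion), used to stage the offline resources. It also runs DNS and the load balancer, which fronts the machine config server, the OCP kube-apiserver, and the cluster's ingress controllers.

One registry node, running the private Quay registry, because the official images on quay.io are hard to pull.

The node with unrestricted internet access (the one that gets past the firewall) is not in the table below. If you have a soft router or similar, pulling images directly on the base node is even better.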

| Machine / hostname | OS | vCPU | RAM | Storage | IP | FQDN | Description |
| --- | --- | --- | --- | --- | --- | --- | --- |
| registry | CentOS 7.8 | 4 | 8 GB | 100 GB | 10.225.45.226 | registry.openshift4.example.com | Image registry |
| bastion | CentOS 7.8 | 8 | 8 GB | 100 GB | 10.225.45.250 | bastion.openshift4.example.com | DNS, load balancer (LB), HTTP file server |
| bootstrap | RHCOS | 4 | 16 GB | 120 GB | 10.225.45.223 | bootstrap.openshift4.example.com | Bootstrap node |
| master1 | RHCOS | 8 | 16 GB | 120 GB | 10.225.45.251 | master1.openshift4.example.com | Control plane node |
| master2 | RHCOS | 8 | 16 GB | 120 GB | 10.225.45.252 | master2.openshift4.example.com | Control plane node |
| master3 | RHCOS | 8 | 16 GB | 120 GB | 10.225.45.222 | master3.openshift4.example.com | Control plane node |
| worker1 | RHCOS | 4 | 16 GB | 120 GB | 10.225.45.223 | worker1.openshift4.example.com | Compute node |

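The .253 address was already taken, so master3 uses .222. Note that worker1 has the same IP as bootstrap (10.225.45.223). This is consistent with the plan to reinstall the bootstrap machine as a worker after installation.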

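openshift4 is the cluster name and example.com is the base domain. Inside OCP, the Kubernetes API, the router and etcd all communicate by domain name, so we also assign an FQDN to every host. All node hostnames must use a three-level domain format, e.g. master1.aa.bb.com. These records will be added to the DNS server later.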

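Firewall ports

Next, let's look at the ports each node needs.

Ports that must be open between all nodes (compute and control plane):

| Protocol | Port | Purpose |
| --- | --- | --- |
| ICMP | N/A | Network reachability tests |
| TCP | 9000-9999 | Host-level services, including the node exporter on 9100-9101 and the Cluster Version Operator on 9099 |
| TCP | 10250-10259 | Default ports reserved by Kubernetes |
| TCP | 10256 | openshift-sdn |
| UDP | 4789 | VXLAN and GENEVE |
| UDP | 6081 | VXLAN and GENEVE |
| UDP | 9000-9999 | Host-level services, including the node exporter on 9100-9101 |
| TCP/UDP | 30000-32767 | Kubernetes NodePort range |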

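Ports the control plane must open to the other nodes:

| Protocol | Port | Purpose |
| --- | --- | --- |
| TCP | 2379-2380 | etcd server and peer ports |
| TCP | 6443 | Kubernetes API |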
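To check that the TCP ports above are actually reachable between nodes, bash's `/dev/tcp` pseudo-device is enough; no extra tools are needed. Below is a minimal sketch. `port_open` is a hypothetical helper, and `timeout` comes from coreutils. UDP ports such as 4789 and 6081 cannot be verified this way, because UDP has no handshake.

```bash
#!/usr/bin/env bash
# port_open HOST PORT [SECONDS]: succeed if a TCP connection to HOST:PORT
# can be opened within SECONDS (default 2), using bash's /dev/tcp redirection.
port_open() {
    local host=$1 port=$2 secs=${3:-2}
    timeout "$secs" bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Example, run from a worker to check the control plane ports on master1:
#   for p in 2379 2380 6443 10250; do
#       port_open master1.openshift4.example.com "$p" \
#           && echo "master1:$p open" || echo "master1:$p closed or filtered"
#   done
```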

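In addition, two layer-4 load balancers are needed: one to expose the cluster API and one to expose Ingress.

| Port | Back-end machines (pool members) | Internal | External | Description |
| --- | --- | --- | --- | --- |
| 6443 | Bootstrap and control plane. Remove the bootstrap host from the load balancer manually after it has initialized the cluster control plane. | x | x | Kubernetes API server |
| 22623 | Bootstrap and control plane. Remove the bootstrap host from the load balancer manually after it has initialized the cluster control plane. | x | | Machine Config server |
| 443 | Machines running the Ingress Controller or router | x | x | HTTPS traffic |
| 80 | Machines running the Ingress Controller or router | x | x | HTTP traffic |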
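Below is a sketch that expresses the four load balancer entries as an HAProxy configuration, generated from the IPs in the planning table. Using HAProxy is my assumption, since this section doesn't name the load balancer software; the timeouts are assumptions too. The router back end lists only worker1, because the ingress router runs on the compute nodes by default. `gen_haproxy` and `section` are hypothetical helpers.

```bash
#!/usr/bin/env bash
# Print a TCP-mode haproxy.cfg fragment for the OCP load balancer.
# Remove the bootstrap lines (and reload haproxy) once the control plane is up.
API_BACKENDS=("bootstrap 10.225.45.223" "master1 10.225.45.251"
              "master2 10.225.45.252" "master3 10.225.45.222")
INGRESS_BACKENDS=("worker1 10.225.45.223")

# section NAME PORT "HOST IP"...: one frontend/backend pair on PORT
section() {
    local name=$1 port=$2 b host ip
    shift 2
    printf 'frontend %s\n    bind *:%s\n    default_backend %s\n\n' "$name" "$port" "$name"
    printf 'backend %s\n    balance roundrobin\n' "$name"
    for b in "$@"; do
        read -r host ip <<< "$b"
        printf '    server %s %s:%s check\n' "$host" "$ip" "$port"
    done
    echo
}

gen_haproxy() {
    printf 'defaults\n    mode tcp\n    timeout connect 10s\n    timeout client 1m\n    timeout server 1m\n\n'
    section openshift-api-server 6443 "${API_BACKENDS[@]}"
    section machine-config-server 22623 "${API_BACKENDS[@]}"
    section ingress-http 80 "${INGRESS_BACKENDS[@]}"
    section ingress-https 443 "${INGRESS_BACKENDS[@]}"
}

gen_haproxy
```

The output is meant to be appended to an haproxy.cfg that already has its `global` section. `haproxy -c -f <file>` validates the result before reloading.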

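DNS

According to the official documentation, an OCP cluster on UPI infrastructure needs the DNS records below. In each record, `<cluster_name>` is the cluster name and `<base_domain>` is the base domain specified in install-config.yaml.

| Component | DNS record | Description |
| --- | --- | --- |
| Kubernetes API | `api.<cluster_name>.<base_domain>.` | Must point to the load balancer for the control plane nodes. Must be resolvable by clients external to the cluster and by all nodes in the cluster. |
| Kubernetes API | `api-int.<cluster_name>.<base_domain>.` | Must point to the load balancer for the control plane nodes. Must be resolvable from all nodes in the cluster. |
| Routes | `*.apps.<cluster_name>.<base_domain>.` | Wildcard record pointing to the load balancer. That load balancer's back end is the nodes running the Ingress router, by default the compute nodes. Must be resolvable by clients external to the cluster and by all nodes in the cluster. |
| etcd | `etcd-<index>.<cluster_name>.<base_domain>.` | OCP requires a record for each etcd instance, pointing to the control plane node that runs it. Instances are distinguished by `<index>` values, starting at 0 and ending at n-1, where n is the number of control plane nodes. Must be resolvable by all nodes in the cluster. |
| etcd | `_etcd-server-ssl._tcp.<cluster_name>.<base_domain>.` | Because etcd serves on port 2380, create one SRV record per etcd node, with priority 0, weight 10 and port 2380. |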
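To illustrate the table, below is a sketch that prints the matching BIND zone records for this article's plan. It assumes:
- the load balancer is the bastion (10.225.45.250);
- etcd-0/1/2 map to master1/2/3;
- the record layout (no TTL on the A records, TTL 86400 on the SRV records) is my own choice.

`gen_records` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# gen_records: print zone records for cluster openshift4.example.com
CLUSTER=openshift4.example.com
LB=10.225.45.250                                      # bastion (DNS + LB)
MASTERS=(10.225.45.251 10.225.45.252 10.225.45.222)   # master1..master3

gen_records() {
    local i
    # api, api-int and the *.apps wildcard all point at the load balancer
    printf '%-40s IN A %s\n' "api.${CLUSTER}." "$LB" \
                             "api-int.${CLUSTER}." "$LB" \
                             "*.apps.${CLUSTER}." "$LB"
    for i in "${!MASTERS[@]}"; do
        printf '%-40s IN A %s\n' "master$((i + 1)).${CLUSTER}." "${MASTERS[$i]}"
        printf '%-40s IN A %s\n' "etcd-${i}.${CLUSTER}." "${MASTERS[$i]}"
    done
    # one SRV record per etcd member: priority 0, weight 10, port 2380
    for i in "${!MASTERS[@]}"; do
        printf '_etcd-server-ssl._tcp.%s. 86400 IN SRV 0 10 2380 etcd-%s.%s.\n' \
            "$CLUSTER" "$i" "$CLUSTER"
    done
}

gen_records
```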

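Image preparation

The images all live on quay.io and are hard to pull from within China. So I followed the official doc *Creating a mirror registry for installation in a restricted network* to set up a mirror registry. The registry must support Docker image manifest version 2, schema 2 (manifest lists); I chose Quay 3.3.

The Quay registry has to run on a separate node because it needs port 443, which would conflict with the load balancer's HTTPS port. Pulling the images also requires getting past the firewall. You therefore need your own node at the network edge with unrestricted internet access (the proxy node).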

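Local image registry (registry)

There's no need to set up the registry right away. It just has to be ready before the oc image sync described below.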

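Here I use docker-compose to run Quay. Install docker and docker-compose yourself and tune the system and kernel parameters. If you also run it in containers, your OS doesn't have to match mine.

The containers already reach each other through network aliases, so ports that don't need exposing are not mapped to the host. You could also use podman or another container tool, or even plain `docker run`.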

Set the machine's hostname:

```bash
hostnamectl set-hostname registry.openshift4.example.com
```

docker-compose for registry

All data is stored under /data/quay/xxx. Create the directories:

```bash
mkdir -p /data/quay/lib/mysql \
         /data/quay/lib/redis \
         /data/quay/config \
         /data/quay/storage
# Container permission issue: the container users belong to the root group,
# but the default umask gives the group no write permission, so add it here
chmod g+w /data/quay/lib/mysql/ \
          /data/quay/lib/redis/ \
          /data/quay/config \
          /data/quay/storage
```

Create the docker-compose.yml file for Quay:

```bash
cat > /data/quay/docker-compose.yml << 'EOF'
version: '3.5'   # the networks.quay.name key below needs compose file format 3.5+
services:
  quay:
    #image: quay.io/redhat/quay:v3.3.1
    image: registry.aliyuncs.com/quayx/redhat-quay:v3.3.1
    container_name: quay
    restart: always
    privileged: true
    sysctls:
      - net.core.somaxconn=1024
    volumes:
      - /data/quay/config:/conf/stack:Z
      - /data/quay/storage:/datastorage:Z
    ports:
      - 443:8443
    command: ["config", "redhat"]
    depends_on:
      - mysql
      - redis
    networks:
      quay:
        aliases:
          - config
    logging:
      driver: json-file
      options:
        max-file: '3'
        max-size: 100m

  mysql:
    image: registry.access.redhat.com/rhscl/mysql-57-rhel7
    container_name: quay-mysql
    restart: always
    privileged: true
    volumes:
      - /data/quay/lib/mysql:/var/lib/mysql/data:Z
    # ports:
    #   - 3306:3306
    environment:
      - MYSQL_ROOT_PASSWORD=redhat
      - MYSQL_DATABASE=enterpriseregistrydb
      - MYSQL_USER=quayuser
      - MYSQL_PASSWORD=redhat
    networks:
      quay:
        aliases:
          - mysql
    logging:
      driver: json-file
      options:
        max-file: '3'
        max-size: 100m

  redis:
    image: registry.access.redhat.com/rhscl/redis-32-rhel7
    container_name: quay-redis
    restart: always
    privileged: true
    volumes:
      - /data/quay/lib/redis:/var/lib/redis/data:Z
    networks:
      quay:
        aliases:
          - redis
    # ports:
    #   - 6379:6379
    depends_on:
      - mysql
    logging:
      driver: json-file
      options:
        max-file: '3'
        max-size: 100m

networks:
  quay:
    name: quay
    external: false
EOF
```

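The components are:
- Database: stores the registry metadata (not the image storage).
- Redis: stores build logs and the Quay setup wizard state.
- Quay: the image registry itself.
- Clair: provides image scanning (not deployed in this compose file).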

Note that the image quay.io/redhat/quay:v3.3.1 cannot be pulled anonymously. Follow the official link to get access to the Red Hat Quay v3 image first:

```bash
docker login -u="redhat+quay" -p="O81WSHRSJR14UAZBK54GQHJS0P1V4CLWAJV1X2C4SD7KO59CQ9N3RE12612XU1HR" quay.io
```

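I have already synced this image to a registry on Aliyun, which makes it easier to pull. In the run command `config redhat`, `redhat` is the password for the Quay web configuration UI once it's up. Now pull the images needed above and start them: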

```bash
cd /data/quay
docker-compose pull
docker-compose up -d
```

If you'll deploy this again later, I recommend using `docker save` to pack the three images into one tarball. You can then import them later with `docker load -i`:

```bash
cd /data/quay
docker save \
    registry.access.redhat.com/rhscl/redis-32-rhel7 \
    registry.access.redhat.com/rhscl/mysql-57-rhel7 \
    registry.aliyuncs.com/quayx/redhat-quay:v3.3.1 | gzip - > quay-img.tar.gz
```

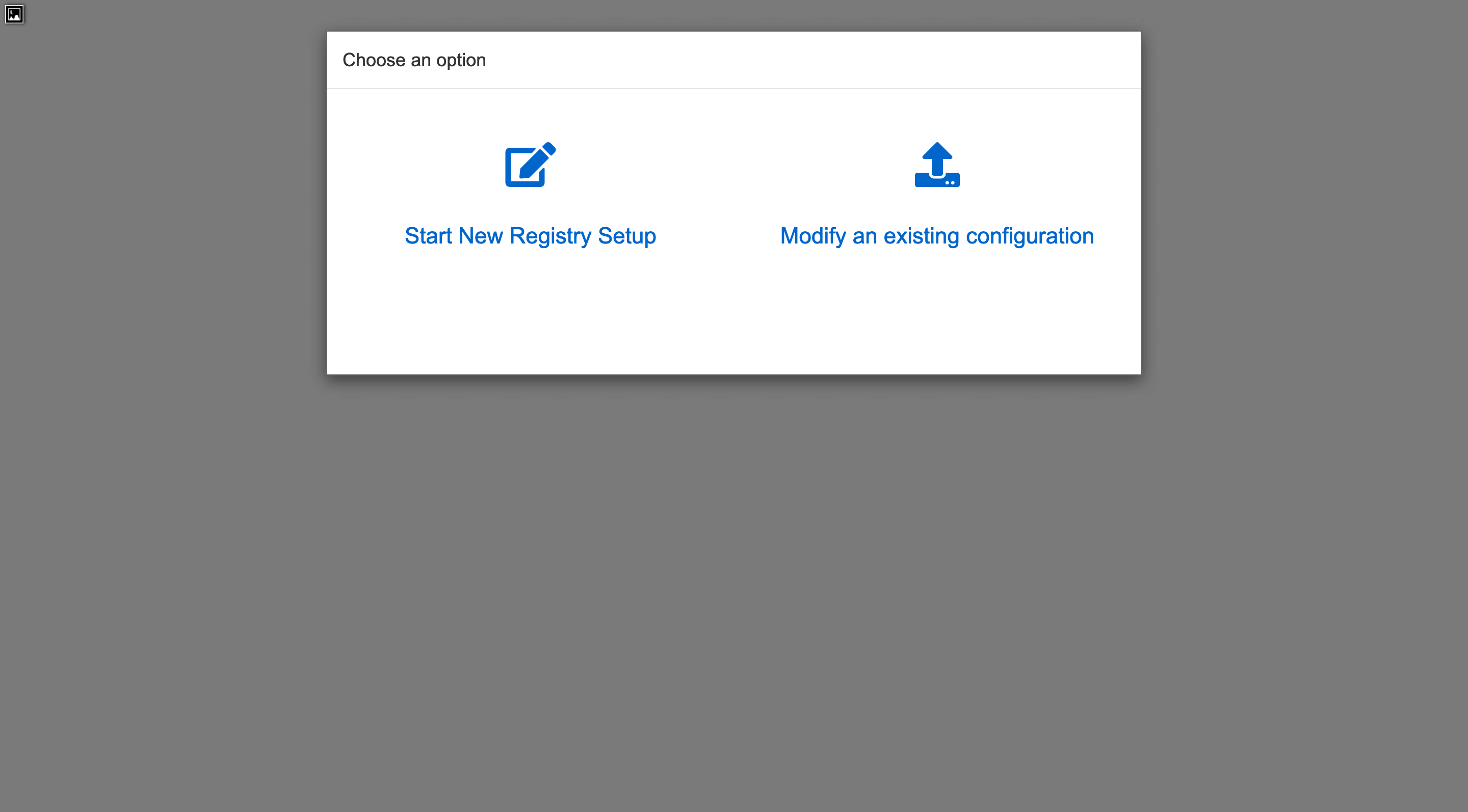

setup for registry

Once it's up, open `https://<registry-ip>`. The basic auth credentials are quayconfig/redhat. Choose Start New Registry Setup.

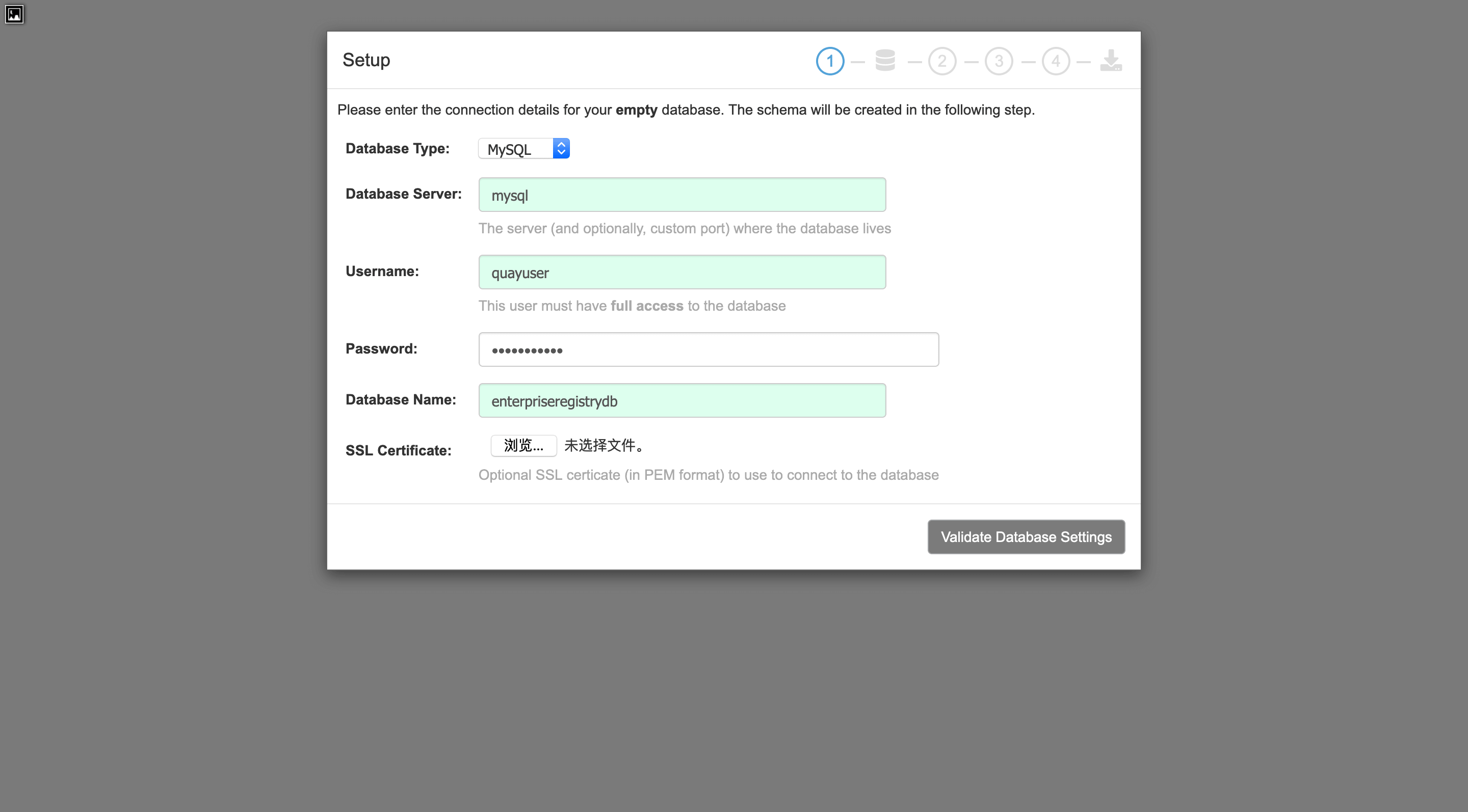

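Choose to create a new configuration, then set up the database.

Fill in the connection details from the environment variables in the docker-compose file above:
- database server: `mysql`
- user: `quayuser`
- password: `redhat`
- database: `enterpriseregistrydb`

Leave the SSL certificate alone for now.

Create the superuser, remember the password, then go to the next step.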

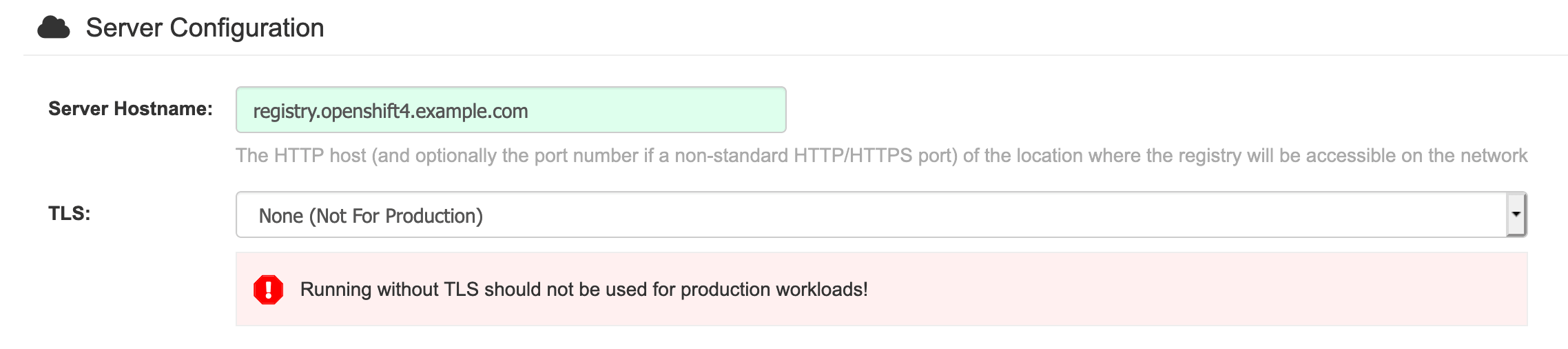

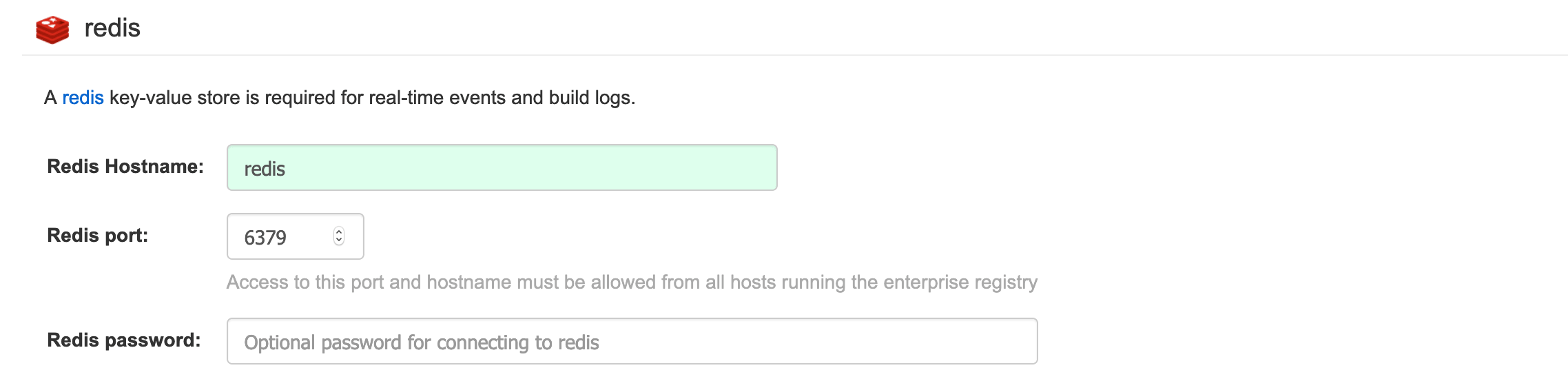

Go to the next step and scroll down. In the Server Configuration section, set the Server Hostname, e.g. registry.openshift4.example.com. Scroll further down and configure Redis (host `redis`).

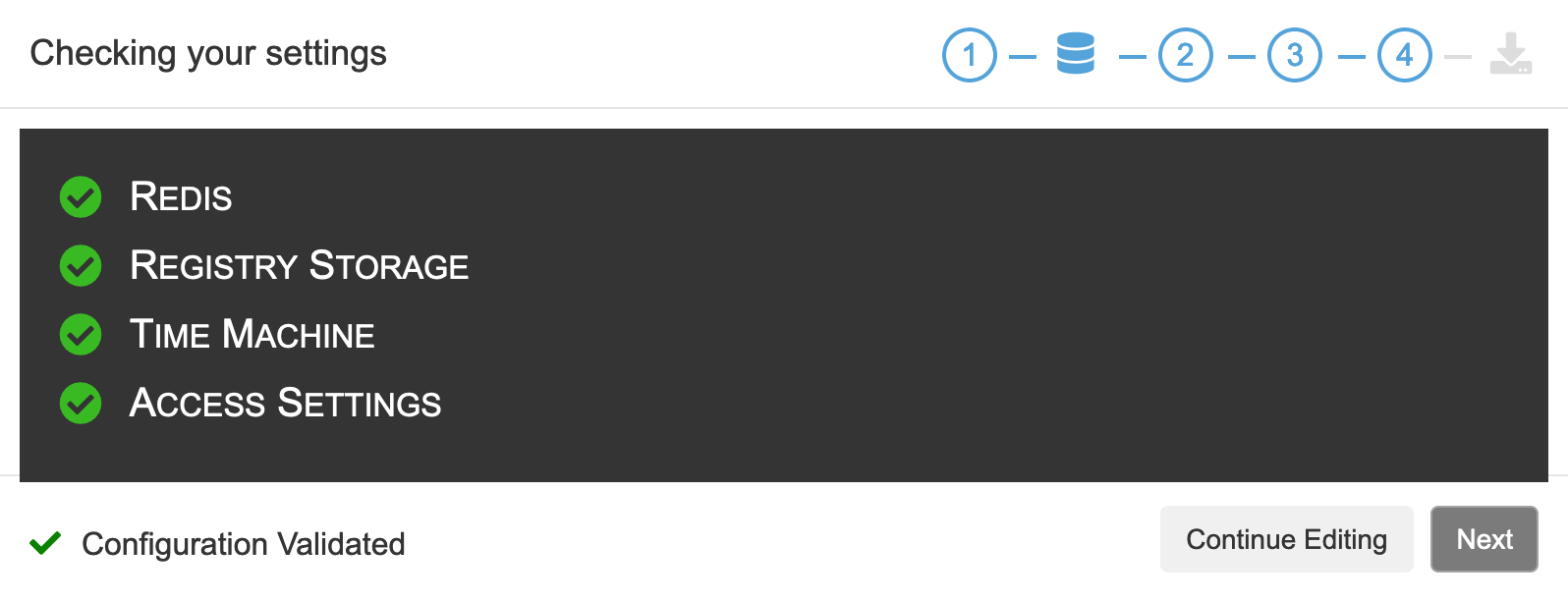

Click Save in the lower-left corner. Once all the checks in the Checking dialog are green, click Next.

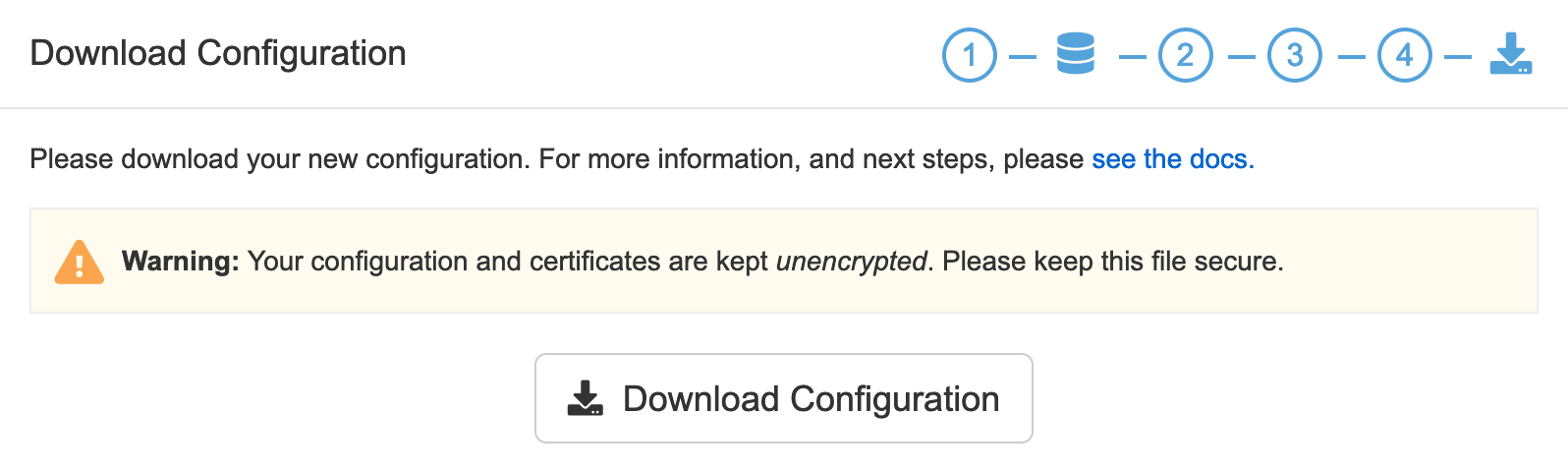

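After the configuration check passes, save and download the configuration.

This exports a quay-config.tar.gz. Upload it to the Quay server and extract it into the configuration directory: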

```bash
cp quay-config.tar.gz /data/quay/config/
cd /data/quay/config/
tar zxvf quay-config.tar.gz
```

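ssl for registry

Next, generate a self-signed certificate for the registry's domain:

```bash
cd /data/quay/config/
# Generate the private key and a self-signed certificate in one step.
# -newkey creates a fresh 2048-bit key in ssl.key, so a separate
# `openssl genrsa` is unnecessary (its key would just be overwritten).
# A wildcard CN is preferable.
openssl req \
    -newkey rsa:2048 -nodes -keyout ssl.key \
    -x509 -days 36500 -out ssl.cert -subj \
    "/C=CN/ST=Wuhan/L=Wuhan/O=WPS/OU=WPS/CN=*.openshift4.example.com"
```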
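A key/certificate mismatch only shows up later as TLS errors in Quay. It is therefore worth checking that ssl.cert and ssl.key belong together. Below is a minimal sketch that compares the RSA modulus printed by the standard `openssl x509` and `openssl rsa` subcommands; `cert_matches_key` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# cert_matches_key CERT KEY: succeed if CERT was issued for the RSA key in KEY.
# Returns 2 if either file cannot be read by openssl.
cert_matches_key() {
    local c k
    c=$(openssl x509 -noout -modulus -in "$1") || return 2
    k=$(openssl rsa  -noout -modulus -in "$2") || return 2
    [ "$c" = "$k" ]
}

# Usage in /data/quay/config:
#   cert_matches_key ssl.cert ssl.key && echo "key and certificate match"
#   openssl x509 -in ssl.cert -noout -subject -enddate
```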

With the certificate in place, change `PREFERRED_URL_SCHEME: http` to `https`:

```bash
sed -ri '/^PREFERRED_URL_SCHEME:/s#\S+$#https#' config.yaml
```

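Then stop the services, comment out `command`, and start them again. Open the web UI and check that it now shows the registry rather than the config tool. If so, add a hosts entry on this machine: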

```bash
cd /data/quay/
docker-compose down
# Comment out `command: ["config", "redhat"]` so Quay starts as a registry
sed -ri '/^\s*command: \["config"/s@^@#@' docker-compose.yml
docker-compose up -d
grep -qw 'registry.openshift4.example.com' /etc/hosts || echo '127.0.0.1 registry.openshift4.example.com' >> /etc/hosts
```

Log in to the registry web UI in a browser. Create an Organization named ocp4 to hold the images, then create a Repository named openshift4.

Proxy node configuration

Add the registry's hosts entry:

```bash
grep -qw 'registry.openshift4.example.com' /etc/hosts || echo '10.225.45.226 registry.openshift4.example.com' >> /etc/hosts
```

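Preparing the binaries and secret.json

Images are pulled on the proxy node with `oc`, the OpenShift client CLI. Most of its subcommands behave the same as kubectl's when operating a cluster. In this section we run oc on the proxy node to pull the images and push them to the local registry. First, prepare the prerequisite files for pulling.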

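First, download oc, which many machines need. The official docs point to a download page, but we can also get it from the mirror site. Extract it and put it on the system $PATH: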

```bash
# Versioned directory: stable-4.5/ only keeps the latest 4.5.z release
wget https://mirror.openshift.com/pub/openshift-v4/clients/ocp/4.5.9/openshift-client-linux-4.5.9.tar.gz
tar zxvf openshift-client-linux-4.5.9.tar.gz
mv oc kubectl /usr/local/bin
```

Install some basic tools: jq for formatting JSON and chrony for time synchronization.

```bash
yum install -y epel-release && \
yum install -y \
    jq \
    bind-utils \
    tcpdump \
    chrony \
    httpd-tools \
    dos2unix \
    strace
```

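Prepare the authentication file for pulling images. Many images are spread across several registries and need credentials. Download the pull secret for registry.redhat.io from the Pull Secret page of the Red Hat OpenShift Cluster Manager site.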

Upload the file (or paste its contents), then pretty-print the JSON:

```bash
jq . pull-secret.txt > pull-secret.json
```

It looks roughly like this:

```json
{
  "auths": {
    "cloud.openshift.com": {
      "auth": "b3BlbnNo...",
      "email": "you@example.com"
    },
    "quay.io": {
      "auth": "b3BlbnNo...",
      "email": "you@example.com"
    },
    "registry.connect.redhat.com": {
      "auth": "NTE3Njg5Nj...",
      "email": "you@example.com"
    },
    "registry.redhat.io": {
      "auth": "NTE3Njg5Nj...",
      "email": "you@example.com"
    }
  }
}
```

Base64-encode the Quay username and password from earlier as `user:pass`, e.g. `echo -n admin:openshift | base64`. Then add the registry and its auth entry to pull-secret.json, keeping the JSON valid:

```json
...
"registry.openshift4.example.com": {
  "auth": "......=="
}
...
```

After adding it, run `jq . pull-secret.json` to check that the JSON is still valid.
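The base64 encoding and the JSON edit can also be scripted, which avoids hand-editing mistakes. Below is a minimal sketch; the helper names are hypothetical, and `admin:openshift` is just the example credential from the text. `base64 -w0` (GNU coreutils) disables line wrapping, which matters for long passwords. The JSON edit uses jq, installed earlier.

```bash
#!/usr/bin/env bash
# registry_auth USER PASS: base64 of "USER:PASS", no newline, no line wrapping
registry_auth() {
    printf '%s:%s' "$1" "$2" | base64 -w0
}

# add_registry_auth IN OUT REGISTRY AUTH: write IN to OUT with an extra
# .auths[REGISTRY] = {"auth": AUTH} entry (requires jq)
add_registry_auth() {
    jq --arg reg "$3" --arg auth "$4" '.auths[$reg] = {auth: $auth}' "$1" > "$2"
}

# Usage:
#   add_registry_auth pull-secret.json pull-secret.new.json \
#       registry.openshift4.example.com "$(registry_auth admin openshift)"
#   mv pull-secret.new.json pull-secret.json
```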

Next, build the image references for the sync from some variables:

```bash
# 4.5.9
OCP_VERSION=$(oc version -o json | jq -r .releaseClientVersion)
# 4.5.9-x86_64
OCP_RELEASE=$OCP_VERSION-$(arch)
# 4
OCP_MAJOR=${OCP_VERSION%%.*}
LOCAL_REGISTRY='registry.openshift4.example.com'
# Matches the ocp4/openshift4 organization/repository created earlier
LOCAL_REPOSITORY="ocp${OCP_MAJOR}/openshift${OCP_MAJOR}"
PRODUCT_REPO='openshift-release-dev'
RELEASE_NAME="ocp-release"
LOCAL_SECRET_JSON='pull-secret.json'
```

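The variables are:
- OCP_RELEASE: the OCP release version, which you can look up on the release page. If it's wrong, the `oc adm` command below reports `image does not exist`.
- LOCAL_REGISTRY: the domain (and port, if any) of the local registry.
- LOCAL_REPOSITORY: the repository that stores the images, here ocp4/openshift4.
- PRODUCT_REPO and RELEASE_NAME: version-identifying values that stay as they are.
- LOCAL_SECRET_JSON: the path to the pull-secret.json prepared above.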
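To see what the variables above expand to without running `oc`, here is the same string assembly with the version and architecture passed in as arguments. `mirror_refs` is a hypothetical helper, and 4.5.9/x86_64 are this article's release.

```bash
#!/usr/bin/env bash
# mirror_refs VERSION ARCH: print the --from/--to/--to-release-image values
# that the variables above produce for `oc adm release mirror`.
mirror_refs() {
    local version=$1 arch=$2
    local release="${version}-${arch}"           # OCP_RELEASE
    local major="${version%%.*}"                 # OCP_MAJOR: strip from the first dot
    local local_registry='registry.openshift4.example.com'
    local local_repo="ocp${major}/openshift${major}"
    echo "from=quay.io/openshift-release-dev/ocp-release:${release}"
    echo "to=${local_registry}/${local_repo}"
    echo "to-release-image=${local_registry}/${local_repo}:${release}"
}

mirror_refs 4.5.9 x86_64
```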

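Syncing images

There are two ways to sync the images:
- Mirror them live with `oc`, straight into LOCAL_REGISTRY.
- Save them to files offline, carry the files into the internal network, and push them to the internal registry with `oc`.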
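The article only demonstrates the live method. As I read the OpenShift 4.x mirroring docs, the offline method takes two steps:
1. `oc adm release mirror --to-dir` on the internet-facing node.
2. `oc image mirror --from-dir` inside the network.

The sketch below only prints those commands; check the flags with `--help` for your oc version before running them. `offline_mirror_cmds` and the `/mnt/media` path are hypothetical.

```bash
#!/usr/bin/env bash
# offline_mirror_cmds SECRET VERSION ARCH MEDIA DEST: print (not run) the
# two-step offline mirroring commands.
offline_mirror_cmds() {
    local secret=$1 version=$2 arch=$3 media=$4 dest=$5
    # Step 1, on the internet-facing node: export the release images to a directory
    echo "oc adm release mirror -a ${secret} --to-dir=${media}/mirror quay.io/openshift-release-dev/ocp-release:${version}-${arch}"
    # Step 2, inside the network after copying ${media}: push to the local registry
    echo "oc image mirror -a ${secret} --from-dir=${media}/mirror 'file://openshift/release:${version}*' ${dest}"
}

offline_mirror_cmds pull-secret.json 4.5.9 x86_64 /mnt/media \
    registry.openshift4.example.com/ocp4/openshift4
```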

Something to explore later: the commands in this official doc unpack the release image so you can view its image list:

```bash
# Show the release image info
oc adm release info -a ${LOCAL_SECRET_JSON} \
    quay.io/openshift-release-dev/ocp-release:4.5.9-x86_64
# Unpack it locally to inspect it
oc image extract -a ${LOCAL_SECRET_JSON} \
    quay.io/openshift-release-dev/ocp-release:4.5.9-x86_64 \
    --path /:/tmp/release
```

If you want to unpack and study this image, I've already synced it to Aliyun:

```bash
# --insecure-policy is a global skopeo option, so it goes before the subcommand
skopeo --insecure-policy copy \
    docker://quay.io/openshift-release-dev/ocp-release:4.5.9-x86_64 \
    docker://registry.aliyuncs.com/openshift-release-dev/ocp-release:4.5.9-x86_64
```

Mirroring live to LOCAL_REGISTRY

Add the registry's hosts entry on the machine doing the sync:

```bash
grep -qw 'registry.openshift4.example.com' /etc/hosts || echo '10.225.45.226 registry.openshift4.example.com' >> /etc/hosts
```

Run the sync:

```bash
# I first tried syncing to an Aliyun registry, but it kept failing; I'll look into that when I have time
oc adm -a ${LOCAL_SECRET_JSON} release mirror \
    --from=quay.io/${PRODUCT_REPO}/${RELEASE_NAME}:${OCP_RELEASE} \
    --to=${LOCAL_REGISTRY}/${LOCAL_REPOSITORY} \
    --to-release-image=${LOCAL_REGISTRY}/${LOCAL_REPOSITORY}:${OCP_RELEASE} \
    --insecure  # the registry uses a self-signed certificate, hence this flag
```

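Below is the output; keep a copy for later. Its end should include the `imageContentSources` section to add to install-config.yaml.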

info: Mirroring 110 images to registry.openshift4.example.com/ocp4/openshift4 ... 
registry.openshift4.example.com/ ocp4/openshift4 manifests: sha256:00edb6c1dae03e1870e1819b4a8d29b655fb6fc40a396a0db2d7c8a20bd8ab8d -> 4.5.9-local-storage-static-provisioner sha256:0259aa5845ce43114c63d59cedeb71c9aa5781c0a6154fe5af8e3cb7bfcfa304 -> 4.5.9-machine-api-operator sha256:07f11763953a2293bac5d662b6bd49c883111ba324599c6b6b28e9f9f74112be -> 4.5.9-cluster-kube-storage-version-migrator-operator sha256:08068503746a2dfd4dcaf61dca44680a9a066960cac7b1e81ea83e1f157e7e8c -> 4.5.9-service-ca-operator sha256:09a7dea10cd584c6048f8df3dcec67dd9a8432eb44051353e180dfeb350c6310 -> 4.5.9-cluster-node-tuning-operator sha256:09c763b2ad50711c2b1b5f26c9c70875f821f460e7214f1b11c4be8374424f42 -> 4.5.9-kube-client-agent sha256:1140eff8442d5ac679a3a85655723cd51f12a63c0c8d61251214ff952cc40b06 -> 4.5.9-container-networking-plugins sha256:13179789b2e15ecf749f5ab51cf11756e2831bc019c02ed0659182e805e725dd -> 4.5.9-cluster-etcd-operator sha256:14f97b5e9195c8f9b682523dc1febbb1f75fd60d408ac2731cdfa047aee0f43d -> 4.5.9-must-gather sha256:15be0e6de6e0d7bec726611f1dcecd162325ee57b993e0d886e70c25a1faacc3 -> 4.5.9-openshift-controller-manager sha256:167c14d56714982360fbaa72b3eb3f8d4fb6c5ad10bbbb7ea396606d8412b7eb -> 4.5.9-sdn sha256:1960c492ce80345e03d50c6cada3d37341ca604d891c48b03109de525e99997a -> 4.5.9-tests sha256:1c24c01e807700d30430ce4ff2f77b54d55910cfbf9ee0cbe5c1c64979547463 -> 4.5.9-prometheus-operator sha256:1cf4b3ce90933a7dbef9d01ebcb00a7f490dce7c44de6ddb16ddebad07de8eda -> 4.5.9-k8s-prometheus-adapter sha256:1d73207be4fbba735dbb53facd23c2359886a9194df3267fd91a843a7ba10316 -> 4.5.9-console-operator sha256:203ab5955dbcbc5f3334e1d7f2ba9fac5f7a1e187370e88c08ad2996a8507711 -> 4.5.9-aws-pod-identity-webhook sha256:21fdd7329f0d9d85acb935223915fbb3af07d4ea051678fc8ec85e47ab95edb1 -> 4.5.9-mdns-publisher sha256:22e47de98549ac9e792f06d4083b5d99db3ecc8dc4c94c8950dba9756a75dc28 -> 4.5.9-grafana sha256:2527b9f6712b8551cbcec637fc87bb9640973e9f9f4972d489c5308ef25eeed0 -> 4.5.9-jenkins 
sha256:273585703f8f56c980ff77f9a71e6e380d8caa645c4101525572906e0820e8fc -> 4.5.9-docker-builder sha256:27774942e605c4d4b13da7721c75135aac27db8409862625b76f5b31eb2e0d63 -> 4.5.9-keepalived-ipfailover sha256:2899afae1d0d9baa0309944dd55975a195a2cbd211f9ecf574e39287827fa684 -> 4.5.9-ovirt-machine-controllers sha256:289d792eada5d1460d69a927dff31328b5fcb1f1d2e31eb7a3fd69afd9a118fc -> 4.5.9-kuryr-cni sha256:2b40c4a5cb2a9586ec6c8e43e3013e6ee97781399b2fa836cf8d6c181ff8430c -> 4.5.9-jenkins-agent-maven sha256:2be07ebc1d005decbb8e624e9beb4d282900f42eb4f8ec0b76e31daedd8874cc -> 4.5.9-installer-artifacts sha256:3161e52e7bbbf445170c4992d5a47ed87c1d026a7f94b8d0cd30c4508fb48643 -> 4.5.9-oauth-server sha256:36e7c99acba49c22c7b84a339bfdab820fae17dec07cb4a17db18617b1d46a37 -> 4.5.9-kube-etcd-signer-server sha256:39f8b615e82b3a3a087d6f7d301303d8a0862e4ca13e8f99e6ad968a04985f80 -> 4.5.9-cli sha256:3b893950a9db5aa3653d80b05b236e72c168ff368d1c2e0192dd6036486eb715 -> 4.5.9-cluster-version-operator sha256:3bbdc631a9271d45960e9db061bde9bc87c6b1df3ec58a17f81dd7326065b4dd -> 4.5.9-prom-label-proxy sha256:3c45fb79851e8498666470739c452535d16fde408f54461cc3e239608ae55943 -> 4.5.9-ironic-ipa-downloader sha256:3ec062eef4a908c7ce7ee01222a641505f9ccb45e4864f4d3c40a2c3160928f2 -> 4.5.9-azure-machine-controllers sha256:416ad8f3ddd49adae4f7db66f8a130319084604296c076c2a6d22264a5688d65 -> 4.5.9-cluster-bootstrap sha256:43fdf850ddbbad7b727eff2850d1886a69b6efca837ff8a35d097c1f15f0922c -> 4.5.9-aws-machine-controllers sha256:45ec998707309535437f03a2f361ea4c660744c41926258f6a42b566228fe59a -> 4.5.9-jenkins-agent-nodejs sha256:486ed094bac19bd3be37eff728596319f73e5648cfb49742ef8c1a859db15e55 -> 4.5.9-operator-lifecycle-manager sha256:4a547f79252b06c0fd9fa112d4bca0cd2e5958f471e9a872e33f4e917c1ebccd -> 4.5.9-cluster-update-keys sha256:4b15cf622173dedc8ab30dd1d81c7a4ffe2317fce9fd179bb26b04167604bfab -> 4.5.9-kube-state-metrics sha256:4d10b546ad2eb8bf67b50217e441d3b01d219ceb96bdf31855a5199fc23c29c7 -> 
4.5.9-cluster-autoscaler-operator sha256:4d4f72c1556d2085c55691bddf5fadea7bb14ba980fdc28f02146b090134f3a6 -> 4.5.9-cluster-svcat-apiserver-operator sha256:4f99f1a5d8a5b7789016316ab33319e5d8b1b27b42ccfb974eb98b27968683b5 -> 4.5.9-prometheus-alertmanager sha256:521721a7d0d298eb361d550c08f682942323a68d07e580450e2a64cbfab54880 -> 4.5.9-baremetal-machine-controllers sha256:52a566dabf19f82f3fba485807784bd34b2b6a03539d0a2c471ea283ee601e62 -> 4.5.9-openstack-machine-controllers sha256:5f77b35d6095068e685cd389f5aeea4b3fad82e771957663839baaa331859eee -> 4.5.9-libvirt-machine-controllers sha256:62b44f524d9b820855629cb01bc8c7fff79a039a72f3b421761e1a65ea621b13 -> 4.5.9-baremetal-runtimecfg sha256:65dc7fc90223061deec270ecdfa365d509950a1efa4b9025c9355d02b8c22f9b -> 4.5.9-cluster-machine-approver sha256:66e8675a73707d519467b3598259587de325315e186a839c233f3659f58be534 -> 4.5.9-ironic sha256:6b4bc1c8fa3e762d70a837f63f660e4ff3e129015d35c82a7b6da0c6fb7919da -> 4.5.9-kube-rbac-proxy sha256:708de132d6a5a6c15c0b7f04f572cd85ff67b1733c5235d74b74f3a690a98ce7 -> 4.5.9-ironic-machine-os-downloader sha256:70c3c46df383aa123ffd0a09f18afcc92e894b01cb9edc4e42cdac9b4a092fa1 -> 4.5.9-cluster-svcat-controller-manager-operator sha256:74c4a3c93c7fba691195dec0190a47cf194759b381d41045a52b6c86aa4169c4 -> 4.5.9-cluster-ingress-operator sha256:755c6cd68730ad7f72950be4110c79b971403864cb9bf8f211edf4d35858b2e6 -> 4.5.9-multus-cni sha256:792a91df9bb7c4fb49f01c0a70479cc356aa324082ad869bf141a7ff98f51f5a -> 4.5.9-deployer sha256:7a56d91d1e0aeddc46dce7fcfda56d4430321e14563e10207893a360a87d3111 -> 4.5.9-ironic-inspector sha256:7ad540594e2a667300dd2584fe2ede2c1a0b814ee6a62f60809d87ab564f4425 -> 4.5.9-x86_64 sha256:80cb8dd15ae02c81ba09387560eb3edb8a12645b3a3179620038df340f51bd54 -> 4.5.9-thanos sha256:84cb2378e2115c1fba70f6cb7ccf053ad848a2038a38c2f5ab45a2e5f21d871c -> 4.5.9-console sha256:87037e2988ca6fee330729017c4ecb164f1a6f68e9eb4edacc976c3efb9515fd -> 4.5.9-insights-operator 
sha256:8bd68e0f18cd6ba0a6f12848f3edb630fcca9090a272aa58e3818a6382067e52 -> 4.5.9-kuryr-controller sha256:8c25f463d04079a8a93791c307ff893264855d60bbb8ba7a54179d9d44fc2f9f -> 4.5.9-openshift-state-metrics sha256:8ee14d942d7a971f52147b15fbd706844e250bd6c72c42150d92fc53cc826111 -> 4.5.9-prometheus-config-reloader sha256:95e63453871b0aca40375be741597363cb8b4393a2d59b2924bb4a4123b4835e -> 4.5.9-csi-snapshot-controller sha256:9bf5e6781444c43939ccf52f4d69fa163dd0faf874bff3c0adb2f259780f2b47 -> 4.5.9-ovn-kubernetes sha256:9d7cf54cc50ab837ec797954ff49c0d6c9989e1b3079431199026203f512daf9 -> 4.5.9-cluster-openshift-controller-manager-operator sha256:a14dc3297e6ea3098118c920275fc54aeb29d2f490e5023f9cbb37b6af05be81 -> 4.5.9-cluster-dns-operator sha256:a25790af4a004de3183a44b240dd3a3138749fae3ffa4ac41cca94319f614213 -> 4.5.9-openshift-apiserver sha256:a48d986d1731609226d8f6876c7cef21e8cefccd4e04c000f255b1f6f908a5ea -> 4.5.9-cluster-csi-snapshot-controller-operator sha256:a51adfc033493814df1a193b0de547749e052cb0811edacc05b878e1fef167b8 -> 4.5.9-etcd sha256:a7433426912aa9ff24b63105c5799d47614978ee80a4a241f5e9f31e8e588710 -> 4.5.9-cluster-network-operator sha256:aa73c07c74838b11b6150c6907ab2c40c86a40eb63bf2c59db881815ab3553c5 -> 4.5.9-coredns sha256:adc3e7c9ab16095165261f0feef990bb829ccf57ae506dadf3734cee0801057a -> 4.5.9-cluster-image-registry-operator sha256:af0f67519dbd7ffe2732d89cfa342ee55557f0dc5e8ee8c674eed5ff209bb15a -> 4.5.9-machine-os-content sha256:b05f9e685b3f20f96fa952c7c31b2bfcf96643e141ae961ed355684d2d209310 -> 4.5.9-baremetal-installer sha256:b1f97ac926075c15a45aac7249e03ff583159b6e03c429132c3111ed5302727b -> 4.5.9-multus-admission-controller sha256:b7261a317a4bdd681f8812c2022ff7f391a606f2b80a1c068363c5675abd4363 -> 4.5.9-cluster-samples-operator sha256:bb64c463c4b999fbd124a772e3db56f27c74b1e033052c737b69510fc1b9e590 -> 4.5.9-pod sha256:bc6c8fd4358d3a46f8df4d81cd424e8778b344c368e6855ed45492815c581438 -> 4.5.9-hyperkube 
sha256:bcd6cd1559b62e4a8031cf0e1676e25585845022d240ac3d927ea47a93469597 -> 4.5.9-machine-config-operator sha256:bcd799425dbdbc7361a44c8bfdc7e6cc3f6f31d59ac18cbea14f3dce8de12d62 -> 4.5.9-cluster-policy-controller sha256:bf0d5fd64ab53dfdd477c90a293f3ec90379a22e8b356044082e807565699863 -> 4.5.9-cloud-credential-operator sha256:c011c86ee352f377bbcaa46521b731677e81195240cdd4faba7a25a679f00530 -> 4.5.9-cluster-autoscaler sha256:c3fc77b6c7ab6e1e2593043532d2a9076831915f2da031fa10aa62bdfbbd7ba8 -> 4.5.9-prometheus-node-exporter sha256:c492193a82adfb303b1ac27e48a12cc248c13d120909c98ea19088debe47fd8d -> 4.5.9-kube-storage-version-migrator sha256:c4fee4a9d551caa8bfa8cca0a1fb919408a86451bccb3649965c09dcab068a88 -> 4.5.9-operator-marketplace sha256:c516f6fa1dd2bf3cca769a32eae08fd027250d56361abb790910c9a18f9fcf07 -> 4.5.9-cluster-node-tuned sha256:c93a0de1e4cb3f04e37d547c1b81a2a22c4d9d01c013374c466ed3fd0416215a -> 4.5.9-cluster-config-operator sha256:ca556d4515818e3e10d2e771d986784460509146ea9dd188fb8ba6f6ac694132 -> 4.5.9-cluster-kube-controller-manager-operator sha256:cca683b844f3e73bb8160e27b986762e77601831eca77e2eea6d94a499959869 -> 4.5.9-multus-route-override-cni sha256:cd1f7e40de6a170946faea98f94375f5aae2d9868c049f9ed00fb4f07b32d775 -> 4.5.9-kube-proxy sha256:d014f98f1d9a5a6f7e70294830583670d1b17892d38bc8c009ec974f12599bff -> 4.5.9-tools sha256:d0d21ae3e27140e1fa13b49d6b2883a0f1466d8e47a2a4839f22de80668d5c95 -> 4.5.9-haproxy-router sha256:d11eff4148b733de49e588cfe4c002b5fdd3dea5caea4a7a3a1087390782e56a -> 4.5.9-cluster-openshift-apiserver-operator sha256:d72cbba07de1dacfe3669e11fa4f4efa2c7b65664899923622b0ca0715573671 -> 4.5.9-telemeter sha256:d8725a2f93d0df184617a41995ee068914ca5a155cdf11c304553abcc4c3100a -> 4.5.9-cli-artifacts sha256:db0794d279028179c45791b0dc7ff22f2172d2267d85e0a37ef2a26ffda9c642 -> 4.5.9-cluster-storage-operator sha256:dbca81e9b4055763f422c22df01a77efba1dca499686a24210795f2ffddf20c9 -> 4.5.9-docker-registry 
sha256:ddb26e047d0e0d7b11bdb625bd7a941ab66f7e1ef5a5f7455d8694e7ba48989d -> 4.5.9-cluster-kube-scheduler-operator sha256:e1d1cea1cf52358b3e91ce8176b83ad36b6b28a226ee6f356fa11496396d93ec -> 4.5.9-cluster-authentication-operator sha256:e34e4f3b0097db265b40d627c2cc7b4ddd953a30b7dd283e2ec4bbe5c257a49e -> 4.5.9-configmap-reloader sha256:e4758039391099dc1b0265099474113dcd8bcce84a1c92d02c1ef760793079e6 -> 4.5.9-cluster-kube-apiserver-operator sha256:e4aae960b36e292b9807f9dc6f3feb57b62cc6a91f9349f983468a1102dc01a7 -> 4.5.9-multus-whereabouts-ipam-cni sha256:e8e8da1a4d743770940708c84408dc6188e4df2104c014160e051ef4a8c51b04 -> 4.5.9-baremetal-operator sha256:ea61c3e635bbaacd3b0833cf1983f25d3fc6eddc306230bf2703334ba61c1c26 -> 4.5.9-gcp-machine-controllers sha256:ec29896354cb1f651142e1c3793890fb59e5252955cb5706cca0e6917e888a61 -> 4.5.9-oauth-proxy sha256:ec3a914142ee8dfa01b7a407f7909ff766c210c44a33d4fa731f5547fdb65796 -> 4.5.9-ironic-static-ip-manager sha256:ee00cecd8084aac097d0b0ee2033d7931467d92f00643c6434ce6620d643cf35 -> 4.5.9-operator-registry sha256:eee108b303058e74c75b5cf16aeefaeb9d0972cd25cf09e0d33ef3213dcd50d0 -> 4.5.9-cluster-monitoring-operator sha256:f6b9d00a5dbde8a772b0709aa4ae7d686aa2b645a2a6fbee85eebe15925f8fbb -> 4.5.9-prometheus sha256:f70fdff00e09230af556265af72a87fbc22de2cf0f6447ebc8205baaca02fae2 -> 4.5.9-installer sha256:fbc84979fec952728014a8551f6cb0deb436f19aa288b8e03803ae370fc3c911 -> 4.5.9-ironic-hardware-inventory-recorder stats: shared=0 unique=0 size=0B phase 0: registry.openshift4.example.com ocp4/openshift4 blobs=0 mounts=0 manifests=110 shared=0 info: Planning completed in 22.31s sha256:ec3a914142ee8dfa01b7a407f7909ff766c210c44a33d4fa731f5547fdb65796 registry.openshift4.example.com/ocp4/openshift4:4.5.9-ironic-static-ip-manager sha256:f6b9d00a5dbde8a772b0709aa4ae7d686aa2b645a2a6fbee85eebe15925f8fbb registry.openshift4.example.com/ocp4/openshift4:4.5.9-prometheus sha256:65dc7fc90223061deec270ecdfa365d509950a1efa4b9025c9355d02b8c22f9b 
registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-machine-approver sha256:9d7cf54cc50ab837ec797954ff49c0d6c9989e1b3079431199026203f512daf9 registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-openshift-controller-manager-operator sha256:07f11763953a2293bac5d662b6bd49c883111ba324599c6b6b28e9f9f74112be registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-kube-storage-version-migrator-operator sha256:3bbdc631a9271d45960e9db061bde9bc87c6b1df3ec58a17f81dd7326065b4dd registry.openshift4.example.com/ocp4/openshift4:4.5.9-prom-label-proxy sha256:bc6c8fd4358d3a46f8df4d81cd424e8778b344c368e6855ed45492815c581438 registry.openshift4.example.com/ocp4/openshift4:4.5.9-hyperkube sha256:167c14d56714982360fbaa72b3eb3f8d4fb6c5ad10bbbb7ea396606d8412b7eb registry.openshift4.example.com/ocp4/openshift4:4.5.9-sdn sha256:486ed094bac19bd3be37eff728596319f73e5648cfb49742ef8c1a859db15e55 registry.openshift4.example.com/ocp4/openshift4:4.5.9-operator-lifecycle-manager sha256:c011c86ee352f377bbcaa46521b731677e81195240cdd4faba7a25a679f00530 registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-autoscaler sha256:e4aae960b36e292b9807f9dc6f3feb57b62cc6a91f9349f983468a1102dc01a7 registry.openshift4.example.com/ocp4/openshift4:4.5.9-multus-whereabouts-ipam-cni sha256:ea61c3e635bbaacd3b0833cf1983f25d3fc6eddc306230bf2703334ba61c1c26 registry.openshift4.example.com/ocp4/openshift4:4.5.9-gcp-machine-controllers sha256:bf0d5fd64ab53dfdd477c90a293f3ec90379a22e8b356044082e807565699863 registry.openshift4.example.com/ocp4/openshift4:4.5.9-cloud-credential-operator sha256:ddb26e047d0e0d7b11bdb625bd7a941ab66f7e1ef5a5f7455d8694e7ba48989d registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-kube-scheduler-operator sha256:cd1f7e40de6a170946faea98f94375f5aae2d9868c049f9ed00fb4f07b32d775 registry.openshift4.example.com/ocp4/openshift4:4.5.9-kube-proxy sha256:adc3e7c9ab16095165261f0feef990bb829ccf57ae506dadf3734cee0801057a 
registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-image-registry-operator sha256:eee108b303058e74c75b5cf16aeefaeb9d0972cd25cf09e0d33ef3213dcd50d0 registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-monitoring-operator sha256:09c763b2ad50711c2b1b5f26c9c70875f821f460e7214f1b11c4be8374424f42 registry.openshift4.example.com/ocp4/openshift4:4.5.9-kube-client-agent sha256:4d10b546ad2eb8bf67b50217e441d3b01d219ceb96bdf31855a5199fc23c29c7 registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-autoscaler-operator sha256:80cb8dd15ae02c81ba09387560eb3edb8a12645b3a3179620038df340f51bd54 registry.openshift4.example.com/ocp4/openshift4:4.5.9-thanos sha256:21fdd7329f0d9d85acb935223915fbb3af07d4ea051678fc8ec85e47ab95edb1 registry.openshift4.example.com/ocp4/openshift4:4.5.9-mdns-publisher sha256:87037e2988ca6fee330729017c4ecb164f1a6f68e9eb4edacc976c3efb9515fd registry.openshift4.example.com/ocp4/openshift4:4.5.9-insights-operator sha256:74c4a3c93c7fba691195dec0190a47cf194759b381d41045a52b6c86aa4169c4 registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-ingress-operator sha256:d8725a2f93d0df184617a41995ee068914ca5a155cdf11c304553abcc4c3100a registry.openshift4.example.com/ocp4/openshift4:4.5.9-cli-artifacts sha256:a25790af4a004de3183a44b240dd3a3138749fae3ffa4ac41cca94319f614213 registry.openshift4.example.com/ocp4/openshift4:4.5.9-openshift-apiserver sha256:e8e8da1a4d743770940708c84408dc6188e4df2104c014160e051ef4a8c51b04 registry.openshift4.example.com/ocp4/openshift4:4.5.9-baremetal-operator sha256:521721a7d0d298eb361d550c08f682942323a68d07e580450e2a64cbfab54880 registry.openshift4.example.com/ocp4/openshift4:4.5.9-baremetal-machine-controllers sha256:d11eff4148b733de49e588cfe4c002b5fdd3dea5caea4a7a3a1087390782e56a registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-openshift-apiserver-operator sha256:4b15cf622173dedc8ab30dd1d81c7a4ffe2317fce9fd179bb26b04167604bfab 
registry.openshift4.example.com/ocp4/openshift4:4.5.9-kube-state-metrics sha256:cca683b844f3e73bb8160e27b986762e77601831eca77e2eea6d94a499959869 registry.openshift4.example.com/ocp4/openshift4:4.5.9-multus-route-override-cni sha256:70c3c46df383aa123ffd0a09f18afcc92e894b01cb9edc4e42cdac9b4a092fa1 registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-svcat-controller-manager-operator sha256:a48d986d1731609226d8f6876c7cef21e8cefccd4e04c000f255b1f6f908a5ea registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-csi-snapshot-controller-operator sha256:5f77b35d6095068e685cd389f5aeea4b3fad82e771957663839baaa331859eee registry.openshift4.example.com/ocp4/openshift4:4.5.9-libvirt-machine-controllers sha256:203ab5955dbcbc5f3334e1d7f2ba9fac5f7a1e187370e88c08ad2996a8507711 registry.openshift4.example.com/ocp4/openshift4:4.5.9-aws-pod-identity-webhook sha256:aa73c07c74838b11b6150c6907ab2c40c86a40eb63bf2c59db881815ab3553c5 registry.openshift4.example.com/ocp4/openshift4:4.5.9-coredns sha256:ee00cecd8084aac097d0b0ee2033d7931467d92f00643c6434ce6620d643cf35 registry.openshift4.example.com/ocp4/openshift4:4.5.9-operator-registry sha256:e1d1cea1cf52358b3e91ce8176b83ad36b6b28a226ee6f356fa11496396d93ec registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-authentication-operator sha256:c4fee4a9d551caa8bfa8cca0a1fb919408a86451bccb3649965c09dcab068a88 registry.openshift4.example.com/ocp4/openshift4:4.5.9-operator-marketplace sha256:08068503746a2dfd4dcaf61dca44680a9a066960cac7b1e81ea83e1f157e7e8c registry.openshift4.example.com/ocp4/openshift4:4.5.9-service-ca-operator sha256:ec29896354cb1f651142e1c3793890fb59e5252955cb5706cca0e6917e888a61 registry.openshift4.example.com/ocp4/openshift4:4.5.9-oauth-proxy sha256:4f99f1a5d8a5b7789016316ab33319e5d8b1b27b42ccfb974eb98b27968683b5 registry.openshift4.example.com/ocp4/openshift4:4.5.9-prometheus-alertmanager sha256:e4758039391099dc1b0265099474113dcd8bcce84a1c92d02c1ef760793079e6 
registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-kube-apiserver-operator sha256:ca556d4515818e3e10d2e771d986784460509146ea9dd188fb8ba6f6ac694132 registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-kube-controller-manager-operator sha256:b05f9e685b3f20f96fa952c7c31b2bfcf96643e141ae961ed355684d2d209310 registry.openshift4.example.com/ocp4/openshift4:4.5.9-baremetal-installer sha256:22e47de98549ac9e792f06d4083b5d99db3ecc8dc4c94c8950dba9756a75dc28 registry.openshift4.example.com/ocp4/openshift4:4.5.9-grafana sha256:84cb2378e2115c1fba70f6cb7ccf053ad848a2038a38c2f5ab45a2e5f21d871c registry.openshift4.example.com/ocp4/openshift4:4.5.9-console sha256:0259aa5845ce43114c63d59cedeb71c9aa5781c0a6154fe5af8e3cb7bfcfa304 registry.openshift4.example.com/ocp4/openshift4:4.5.9-machine-api-operator sha256:1d73207be4fbba735dbb53facd23c2359886a9194df3267fd91a843a7ba10316 registry.openshift4.example.com/ocp4/openshift4:4.5.9-console-operator sha256:27774942e605c4d4b13da7721c75135aac27db8409862625b76f5b31eb2e0d63 registry.openshift4.example.com/ocp4/openshift4:4.5.9-keepalived-ipfailover sha256:2be07ebc1d005decbb8e624e9beb4d282900f42eb4f8ec0b76e31daedd8874cc registry.openshift4.example.com/ocp4/openshift4:4.5.9-installer-artifacts sha256:15be0e6de6e0d7bec726611f1dcecd162325ee57b993e0d886e70c25a1faacc3 registry.openshift4.example.com/ocp4/openshift4:4.5.9-openshift-controller-manager sha256:c3fc77b6c7ab6e1e2593043532d2a9076831915f2da031fa10aa62bdfbbd7ba8 registry.openshift4.example.com/ocp4/openshift4:4.5.9-prometheus-node-exporter sha256:36e7c99acba49c22c7b84a339bfdab820fae17dec07cb4a17db18617b1d46a37 registry.openshift4.example.com/ocp4/openshift4:4.5.9-kube-etcd-signer-server sha256:3c45fb79851e8498666470739c452535d16fde408f54461cc3e239608ae55943 registry.openshift4.example.com/ocp4/openshift4:4.5.9-ironic-ipa-downloader sha256:b7261a317a4bdd681f8812c2022ff7f391a606f2b80a1c068363c5675abd4363 
registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-samples-operator sha256:2899afae1d0d9baa0309944dd55975a195a2cbd211f9ecf574e39287827fa684 registry.openshift4.example.com/ocp4/openshift4:4.5.9-ovirt-machine-controllers sha256:95e63453871b0aca40375be741597363cb8b4393a2d59b2924bb4a4123b4835e registry.openshift4.example.com/ocp4/openshift4:4.5.9-csi-snapshot-controller sha256:e34e4f3b0097db265b40d627c2cc7b4ddd953a30b7dd283e2ec4bbe5c257a49e registry.openshift4.example.com/ocp4/openshift4:4.5.9-configmap-reloader sha256:45ec998707309535437f03a2f361ea4c660744c41926258f6a42b566228fe59a registry.openshift4.example.com/ocp4/openshift4:4.5.9-jenkins-agent-nodejs sha256:1c24c01e807700d30430ce4ff2f77b54d55910cfbf9ee0cbe5c1c64979547463 registry.openshift4.example.com/ocp4/openshift4:4.5.9-prometheus-operator sha256:d0d21ae3e27140e1fa13b49d6b2883a0f1466d8e47a2a4839f22de80668d5c95 registry.openshift4.example.com/ocp4/openshift4:4.5.9-haproxy-router sha256:3161e52e7bbbf445170c4992d5a47ed87c1d026a7f94b8d0cd30c4508fb48643 registry.openshift4.example.com/ocp4/openshift4:4.5.9-oauth-server sha256:13179789b2e15ecf749f5ab51cf11756e2831bc019c02ed0659182e805e725dd registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-etcd-operator sha256:d014f98f1d9a5a6f7e70294830583670d1b17892d38bc8c009ec974f12599bff registry.openshift4.example.com/ocp4/openshift4:4.5.9-tools sha256:792a91df9bb7c4fb49f01c0a70479cc356aa324082ad869bf141a7ff98f51f5a registry.openshift4.example.com/ocp4/openshift4:4.5.9-deployer sha256:8bd68e0f18cd6ba0a6f12848f3edb630fcca9090a272aa58e3818a6382067e52 registry.openshift4.example.com/ocp4/openshift4:4.5.9-kuryr-controller sha256:62b44f524d9b820855629cb01bc8c7fff79a039a72f3b421761e1a65ea621b13 registry.openshift4.example.com/ocp4/openshift4:4.5.9-baremetal-runtimecfg sha256:dbca81e9b4055763f422c22df01a77efba1dca499686a24210795f2ffddf20c9 registry.openshift4.example.com/ocp4/openshift4:4.5.9-docker-registry 
sha256:52a566dabf19f82f3fba485807784bd34b2b6a03539d0a2c471ea283ee601e62 registry.openshift4.example.com/ocp4/openshift4:4.5.9-openstack-machine-controllers sha256:8c25f463d04079a8a93791c307ff893264855d60bbb8ba7a54179d9d44fc2f9f registry.openshift4.example.com/ocp4/openshift4:4.5.9-openshift-state-metrics sha256:43fdf850ddbbad7b727eff2850d1886a69b6efca837ff8a35d097c1f15f0922c registry.openshift4.example.com/ocp4/openshift4:4.5.9-aws-machine-controllers sha256:708de132d6a5a6c15c0b7f04f572cd85ff67b1733c5235d74b74f3a690a98ce7 registry.openshift4.example.com/ocp4/openshift4:4.5.9-ironic-machine-os-downloader sha256:f70fdff00e09230af556265af72a87fbc22de2cf0f6447ebc8205baaca02fae2 registry.openshift4.example.com/ocp4/openshift4:4.5.9-installer sha256:c516f6fa1dd2bf3cca769a32eae08fd027250d56361abb790910c9a18f9fcf07 registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-node-tuned sha256:bcd799425dbdbc7361a44c8bfdc7e6cc3f6f31d59ac18cbea14f3dce8de12d62 registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-policy-controller sha256:8ee14d942d7a971f52147b15fbd706844e250bd6c72c42150d92fc53cc826111 registry.openshift4.example.com/ocp4/openshift4:4.5.9-prometheus-config-reloader sha256:09a7dea10cd584c6048f8df3dcec67dd9a8432eb44051353e180dfeb350c6310 registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-node-tuning-operator sha256:3b893950a9db5aa3653d80b05b236e72c168ff368d1c2e0192dd6036486eb715 registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-version-operator sha256:4a547f79252b06c0fd9fa112d4bca0cd2e5958f471e9a872e33f4e917c1ebccd registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-update-keys sha256:2527b9f6712b8551cbcec637fc87bb9640973e9f9f4972d489c5308ef25eeed0 registry.openshift4.example.com/ocp4/openshift4:4.5.9-jenkins sha256:1cf4b3ce90933a7dbef9d01ebcb00a7f490dce7c44de6ddb16ddebad07de8eda registry.openshift4.example.com/ocp4/openshift4:4.5.9-k8s-prometheus-adapter 
sha256:416ad8f3ddd49adae4f7db66f8a130319084604296c076c2a6d22264a5688d65 registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-bootstrap sha256:7ad540594e2a667300dd2584fe2ede2c1a0b814ee6a62f60809d87ab564f4425 registry.openshift4.example.com/ocp4/openshift4:4.5.9-x86_64 sha256:bcd6cd1559b62e4a8031cf0e1676e25585845022d240ac3d927ea47a93469597 registry.openshift4.example.com/ocp4/openshift4:4.5.9-machine-config-operator sha256:6b4bc1c8fa3e762d70a837f63f660e4ff3e129015d35c82a7b6da0c6fb7919da registry.openshift4.example.com/ocp4/openshift4:4.5.9-kube-rbac-proxy sha256:a51adfc033493814df1a193b0de547749e052cb0811edacc05b878e1fef167b8 registry.openshift4.example.com/ocp4/openshift4:4.5.9-etcd sha256:1960c492ce80345e03d50c6cada3d37341ca604d891c48b03109de525e99997a registry.openshift4.example.com/ocp4/openshift4:4.5.9-tests sha256:66e8675a73707d519467b3598259587de325315e186a839c233f3659f58be534 registry.openshift4.example.com/ocp4/openshift4:4.5.9-ironic sha256:755c6cd68730ad7f72950be4110c79b971403864cb9bf8f211edf4d35858b2e6 registry.openshift4.example.com/ocp4/openshift4:4.5.9-multus-cni sha256:a7433426912aa9ff24b63105c5799d47614978ee80a4a241f5e9f31e8e588710 registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-network-operator sha256:a14dc3297e6ea3098118c920275fc54aeb29d2f490e5023f9cbb37b6af05be81 registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-dns-operator sha256:d72cbba07de1dacfe3669e11fa4f4efa2c7b65664899923622b0ca0715573671 registry.openshift4.example.com/ocp4/openshift4:4.5.9-telemeter sha256:7a56d91d1e0aeddc46dce7fcfda56d4430321e14563e10207893a360a87d3111 registry.openshift4.example.com/ocp4/openshift4:4.5.9-ironic-inspector sha256:db0794d279028179c45791b0dc7ff22f2172d2267d85e0a37ef2a26ffda9c642 registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-storage-operator sha256:3ec062eef4a908c7ce7ee01222a641505f9ccb45e4864f4d3c40a2c3160928f2 registry.openshift4.example.com/ocp4/openshift4:4.5.9-azure-machine-controllers 
sha256:273585703f8f56c980ff77f9a71e6e380d8caa645c4101525572906e0820e8fc registry.openshift4.example.com/ocp4/openshift4:4.5.9-docker-builder sha256:4d4f72c1556d2085c55691bddf5fadea7bb14ba980fdc28f02146b090134f3a6 registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-svcat-apiserver-operator sha256:9bf5e6781444c43939ccf52f4d69fa163dd0faf874bff3c0adb2f259780f2b47 registry.openshift4.example.com/ocp4/openshift4:4.5.9-ovn-kubernetes sha256:289d792eada5d1460d69a927dff31328b5fcb1f1d2e31eb7a3fd69afd9a118fc registry.openshift4.example.com/ocp4/openshift4:4.5.9-kuryr-cni sha256:af0f67519dbd7ffe2732d89cfa342ee55557f0dc5e8ee8c674eed5ff209bb15a registry.openshift4.example.com/ocp4/openshift4:4.5.9-machine-os-content sha256:14f97b5e9195c8f9b682523dc1febbb1f75fd60d408ac2731cdfa047aee0f43d registry.openshift4.example.com/ocp4/openshift4:4.5.9-must-gather sha256:c93a0de1e4cb3f04e37d547c1b81a2a22c4d9d01c013374c466ed3fd0416215a registry.openshift4.example.com/ocp4/openshift4:4.5.9-cluster-config-operator sha256:b1f97ac926075c15a45aac7249e03ff583159b6e03c429132c3111ed5302727b registry.openshift4.example.com/ocp4/openshift4:4.5.9-multus-admission-controller sha256:bb64c463c4b999fbd124a772e3db56f27c74b1e033052c737b69510fc1b9e590 registry.openshift4.example.com/ocp4/openshift4:4.5.9-pod sha256:39f8b615e82b3a3a087d6f7d301303d8a0862e4ca13e8f99e6ad968a04985f80 registry.openshift4.example.com/ocp4/openshift4:4.5.9-cli sha256:c492193a82adfb303b1ac27e48a12cc248c13d120909c98ea19088debe47fd8d registry.openshift4.example.com/ocp4/openshift4:4.5.9-kube-storage-version-migrator sha256:2b40c4a5cb2a9586ec6c8e43e3013e6ee97781399b2fa836cf8d6c181ff8430c registry.openshift4.example.com/ocp4/openshift4:4.5.9-jenkins-agent-maven sha256:00edb6c1dae03e1870e1819b4a8d29b655fb6fc40a396a0db2d7c8a20bd8ab8d registry.openshift4.example.com/ocp4/openshift4:4.5.9-local-storage-static-provisioner sha256:1140eff8442d5ac679a3a85655723cd51f12a63c0c8d61251214ff952cc40b06 
registry.openshift4.example.com/ocp4/openshift4:4.5.9-container-networking-plugins sha256:fbc84979fec952728014a8551f6cb0deb436f19aa288b8e03803ae370fc3c911 registry.openshift4.example.com/ocp4/openshift4:4.5.9-ironic-hardware-inventory-recorder info: Mirroring completed in 2.27s (0B/s) Success Update image: registry.openshift4.example.com/ocp4/openshift4:4.5.9-x86_64 Mirror prefix: registry.openshift4.example.com/ocp4/openshift4 To use the new mirrored repository to install, add the following section to the install-config.yaml: imageContentSources: - mirrors: - registry.openshift4.example.com/ocp4/openshift4 source: quay.io/openshift-release-dev/ocp-v4.0-art-dev - mirrors: - registry.openshift4.example.com/ocp4/openshift4 source: quay.io/openshift-release-dev/ocp-release To use the new mirrored repository for upgrades, use the following to create an ImageContentSourcePolicy: apiVersion: operator.openshift.io/v1alpha1 kind: ImageContentSourcePolicy metadata: name: example spec: repositoryDigestMirrors: - mirrors: - registry.openshift4.example.com/ocp4/openshift4 source: quay.io/openshift-release-dev/ocp-v4.0-art-dev - mirrors: - registry.openshift4.example.com/ocp4/openshift4 source: quay.io/openshift-release-dev/ocp-release

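If your proxy connection is unstable, just rerun the command a few times. When `oc adm release mirror` finishes it prints something like the following. Save it, because it goes into `install-config.yaml` later: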

```yaml
imageContentSources:
- mirrors:
  - registry.openshift4.example.com/ocp4/openshift4
  source: quay.io/openshift-release-dev/ocp-release
- mirrors:
  - registry.openshift4.example.com/ocp4/openshift4
  source: quay.io/openshift-release-dev/ocp-v4.0-art-dev
```

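Mirroring to a local directory

Now for mirroring to local disk. If the node with internet access (via the proxy) cannot reach the internal network, first save the images to files on that node, then copy the files into the internal network and push them to the internal registry from there.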

```shell
# Create the target directory
mkdir mirror
oc adm -a ${LOCAL_SECRET_JSON} release mirror \
  --from=quay.io/${PRODUCT_REPO}/${RELEASE_NAME}:${OCP_RELEASE} \
  --to-dir=mirror/
```

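Again, rerun it if the proxy drops; the command resumes from where it left off. When the download completes you will see output similar to this: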
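Because the advice is simply to rerun the mirror command until it gets through (it resumes on its own), a small retry wrapper saves babysitting the terminal. This is a minimal sketch: `retry` is a hypothetical helper name, not part of `oc`, and the attempt count and delay are arbitrary choices.

```shell
# Hypothetical helper (not part of oc): rerun a command until it succeeds,
# giving up after MAX attempts and sleeping DELAY seconds between attempts.
retry() {
  local max=$1 delay=$2 n=1
  shift 2
  until "$@"; do
    if [ "$n" -ge "$max" ]; then
      echo "retry: giving up after $n attempts: $*" >&2
      return 1
    fi
    n=$((n + 1))
    sleep "$delay"
  done
}

# Example: mirror to a local directory, up to 5 attempts, 30 seconds apart.
# retry 5 30 oc adm -a "${LOCAL_SECRET_JSON}" release mirror \
#   --from=quay.io/${PRODUCT_REPO}/${RELEASE_NAME}:${OCP_RELEASE} \
#   --to-dir=mirror/
```

Since the command itself picks up where it stopped, each retry only fetches what is still missing.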

```
phase 0:
  openshift/release blobs=238 mounts=0 manifests=110 shared=5

info: Planning completed in 22.61s
uploading: file://openshift/release sha256:740e93718e387c4611372639d025bcde65fa084f10cd64b2fea59ac3edc3b5c2 823.3MiB
uploading: file://openshift/release sha256:4908e3220585a526b87e77f88ee7ddd06c502447269792ea4013e1b2f414f41e 383.1MiB
uploading: file://openshift/release sha256:b9cb5a1468a5d3b235df159d5f795d2f44bc14fbe6c3d36b28ce8a69eb545771 515.5MiB
sha256:15be0e6de6e0d7bec726611f1dcecd162325ee57b993e0d886e70c25a1faacc3 file://openshift/release:4.5.9-openshift-controller-manager
sha256:bc6c8fd4358d3a46f8df4d81cd424e8778b344c368e6855ed45492815c581438 file://openshift/release:4.5.9-hyperkube
sha256:74c4a3c93c7fba691195dec0190a47cf194759b381d41045a52b6c86aa4169c4 file://openshift/release:4.5.9-cluster-ingress-operator
sha256:bcd6cd1559b62e4a8031cf0e1676e25585845022d240ac3d927ea47a93469597 file://openshift/release:4.5.9-machine-config-operator
sha256:9d7cf54cc50ab837ec797954ff49c0d6c9989e1b3079431199026203f512daf9 file://openshift/release:4.5.9-cluster-openshift-controller-manager-operator
sha256:c492193a82adfb303b1ac27e48a12cc248c13d120909c98ea19088debe47fd8d file://openshift/release:4.5.9-kube-storage-version-migrator
sha256:2527b9f6712b8551cbcec637fc87bb9640973e9f9f4972d489c5308ef25eeed0 file://openshift/release:4.5.9-jenkins
....
info: Mirroring completed in 1h27m3.41s (345.7kB/s)

Success
Update image:  openshift/release:4.5.9

To upload local images to a registry, run:

    oc image mirror --from-dir=mirror/ 'file://openshift/release:4.5.9*' REGISTRY/REPOSITORY

Configmap signature file mirror/config/signature-sha256-7ad540594e2a6673.yaml created
```

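The end of the output gives the command for pushing the contents of `mirror/` to a registry, `oc image mirror --from-dir=mirror/ 'file://openshift/release:4.5.9*' REGISTRY/REPOSITORY`, which we will use later. The directory now looks like this: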

```
$ tree -L 4 mirror/
mirror/
├── config
│   └── signature-sha256-7ad540594e2a6673.yaml
└── v2
    └── openshift
        └── release
            ├── blobs
            └── manifests
```

Pack the mirror directory into a tarball; compressed, it comes to roughly 5-6 GB:

```shell
tar zcvf mirror.tar.gz mirror/
```
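A 5-6 GB archive crossing into an isolated network is easy to truncate in transit, so it can be worth recording a checksum before copying and verifying it on the other side before extracting. A minimal sketch using GNU `sha256sum`; the helper names and the `.sha256` sidecar file are assumptions, not part of the original procedure.

```shell
# Hypothetical helpers. The checksum file stores a bare filename, so both
# functions cd into the archive's directory before running sha256sum.
make_checksum() {
  (cd "$(dirname "$1")" && sha256sum "$(basename "$1")" > "$(basename "$1").sha256")
}

# sha256sum -c re-hashes the file and exits non-zero on any mismatch.
verify_checksum() {
  (cd "$(dirname "$1")" && sha256sum -c --quiet "$(basename "$1").sha256")
}

# On the internet-connected node:  make_checksum mirror.tar.gz
# After copying mirror.tar.gz and mirror.tar.gz.sha256 inside:
#   verify_checksum mirror.tar.gz && tar zxvf mirror.tar.gz
```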

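Transfer `mirror.tar.gz` into the internal network; here it goes to the LOCAL_REGISTRY machine. Remember to copy `pull-secret.json` and the `oc` binary as well. Once the files are in place, set the variables: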

```shell
# 4.5.9
OCP_VERSION=$(oc version -o json | jq -r .releaseClientVersion)
# 4.5.9-x86_64
OCP_RELEASE=$OCP_VERSION-$(arch)
# 4
OCP_MAJOR=${OCP_VERSION%%.*}

LOCAL_REGISTRY='registry.openshift4.example.com'
# Corresponds to the ocp4/openshift4 path used earlier
LOCAL_REPOSITORY="ocp${OCP_MAJOR}/openshift${OCP_MAJOR}"

PRODUCT_REPO='openshift-release-dev'
RELEASE_NAME="ocp-release"
LOCAL_SECRET_JSON='pull-secret.json'
```
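To see how these expansions fit together without needing `oc` or `jq`, the sketch below derives the same values from a fixed version string and architecture. `release_image` is a hypothetical helper; its result is the image name you later look for in the registry web UI.

```shell
# Hypothetical helper: derive the release image name the same way as the
# variables above, but from explicit arguments instead of oc/arch.
release_image() {
  local version=$1 arch=$2 registry=$3
  local major=${version%%.*}              # "4.5.9" -> "4" (strip longest ".*" suffix)
  local repo="ocp${major}/openshift${major}"
  echo "${registry}/${repo}:${version}-${arch}"
}

release_image 4.5.9 x86_64 registry.openshift4.example.com
# registry.openshift4.example.com/ocp4/openshift4:4.5.9-x86_64
```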

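Then import the images using the command printed at the end of the mirror-to-directory step. Because the registry certificate is signed by a private CA rather than a public one, add `--insecure`: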

```shell
tar zxvf mirror.tar.gz
oc image mirror -a ${LOCAL_SECRET_JSON} \
  --from-dir=mirror/ 'file://openshift/release:4.5.9*' \
  ${LOCAL_REGISTRY}/${LOCAL_REPOSITORY} \
  --insecure
```

After the import, check in the registry's web UI that an image with the following name exists:

```shell
$ echo ${LOCAL_REGISTRY}/${LOCAL_REPOSITORY}:${OCP_RELEASE}
registry.openshift4.example.com/ocp4/openshift4:4.5.9-x86_64
```

bastion

The bastion provides the following:

- DNS server: CoreDNS + etcd
- Load balancer: HAProxy
- HTTP file download: nginx
- `openshift-install` binary: converts the deployment manifests into Ignition files
- `oc`: the OCP client CLI, used like `kubectl` to operate the OCP cluster

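If you have plenty of machines, or your internal network already has a DNS server and a load balancer, there is no need to pile all of these services onto one host. Likewise, use whatever tools you know well; you don't have to copy my choices exactly.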

cert for registry

Because the registry certificate is signed by a private CA, pushing images from the bastion fails with `x509: certificate signed by unknown authority`. Copy Quay's `ssl.cert` from the registry machine to the bastion and run:

```shell
cp ssl.cert /etc/pki/ca-trust/source/anchors/ssl.crt
update-ca-trust extract
```

If you log in with Docker, also copy the certificate into Docker's trusted-certificate directory:

```shell
mkdir -p /etc/docker/certs.d/registry.openshift4.example.com
cp ssl.cert /etc/docker/certs.d/registry.openshift4.example.com/ssl.crt
systemctl restart docker
```

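oc && openshift-install

Copy the `oc` binary over from the registry machine. To keep the installer version consistent with the mirrored release, extract the `openshift-install` binary from the mirrored release image instead of downloading it from https://mirror.openshift.com/pub/openshift-v4/clients/ocp/; otherwise you will hit sha256 mismatches later.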

```shell
# This step needs the variables exported above,
# or run it on the internet-connected node beforehand and copy the result over.
# GODEBUG works around "x509: certificate relies on legacy Common Name field"
GODEBUG=x509ignoreCN=0 oc adm release extract \
  -a ${LOCAL_SECRET_JSON} \
  --command=openshift-install \
  "${LOCAL_REGISTRY}/${LOCAL_REPOSITORY}:${OCP_RELEASE}"
```

If it reports that the image does not exist, the mirrored images are incomplete or the version is wrong. Search the registry web UI for the image `${LOCAL_REGISTRY}/${LOCAL_REPOSITORY}:${OCP_RELEASE}` yourself.

Move the binary into `$PATH` and check its version:

```shell
chmod +x openshift-install
mv openshift-install /usr/local/bin/
openshift-install version

# Output:
openshift-install 4.5.9
built from commit 0d5c871ce7d03f3d03ab4371dc39916a5415cf5c
release image registry.openshift4.example.com/ocp4/openshift4@sha256:7ad540594e2a667300dd2584fe2ede2c1a0b814ee6a62f60809d87ab564f4425
```
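Since the whole point of extracting the installer is version consistency, a quick scripted comparison against `OCP_VERSION` can catch a stray binary on `$PATH`. A minimal sketch, assuming the first line of `openshift-install version` has the form shown above (`openshift-install 4.5.9`); the function name is hypothetical.

```shell
# Hypothetical check: read "openshift-install version" output on stdin and
# succeed only if the version on its first line equals the expected one.
installer_version_matches() {
  local expected=$1 actual
  actual=$(awk 'NR == 1 { print $2 }')
  [ "$actual" = "$expected" ]
}

# Usage on the bastion:
# openshift-install version | installer_version_matches "${OCP_VERSION}" \
#   && echo "installer matches ${OCP_VERSION}" || echo "version mismatch"
```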

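dns server

Per the official documentation, most components in the cluster find each other through DNS, so we need to deploy a DNS server. Back in 3.x the official Ansible install provided it with named, and some people online use dnsmasq. Here we run CoreDNS with docker-compose. Because we need SRV records, CoreDNS is paired with its etcd plugin.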

```shell
mkdir -p /data/coredns
cd /data/coredns
cat > docker-compose.yml <<'EOF'
version: '3.5'  # a "name" under networks requires compose file format 3.5+
services:
  coredns:
    image: coredns/coredns:1.7.0
    container_name: coredns
    restart: always
    ports:
      - "53:53/udp"
      - "53:53/tcp"
      - "9153:9153/tcp"
    cap_drop:
      - ALL
    cap_add:
      - NET_BIND_SERVICE
    volumes:
      - /data/coredns/config/:/etc/coredns/
      - /etc/localtime:/etc/localtime:ro
    command: ["-conf", "/etc/coredns/Corefile"]
    depends_on:
      - coredns-etcd
    networks:
      coredns:
        aliases:
          - coredns
    logging:
      driver: json-file
      options:
        max-file: '3'
        max-size: 100m

  coredns-etcd:
    #image: quay.io/coreos/etcd:v3.4.13
    image: registry.aliyuncs.com/k8sxio/etcd:3.4.13-0
    container_name: coredns-etcd
    restart: always
    volumes:
      - /data/coredns/etcd/data:/var/lib/etcd:Z
      - /data/coredns/etcd/conf:/etc/etcd:Z
      - /etc/localtime:/etc/localtime:ro
    command: ["/usr/local/bin/etcd", "--config-file=/etc/etcd/etcd.config.yml"]
    networks:
      coredns:
        aliases:
          - etcd
    logging:
      driver: json-file
      options:
        max-file: '3'
        max-size: 100m

networks:
  coredns:
    name: coredns
    external: false
EOF
```

Create the directories:

```shell
mkdir -p /data/coredns/config/ \
  /data/coredns/etcd/data \
  /data/coredns/etcd/conf
# Since etcd 3.4.10 the data directory must have mode 0700
chmod 0700 /data/coredns/etcd/data
```

coredns

Create the CoreDNS config file:

```shell
cat > /data/coredns/config/Corefile <<'EOF'
.:53 {  # listen on port 53, TCP and UDP
    template IN A apps.openshift4.example.com {
        match .*apps\.openshift4\.example\.com  # regex matched against the queried name
        answer "{{ .Name }} 60 IN A 10.225.45.250"  # the DNS answer
        fallthrough
    }
    etcd {  # enable the etcd plugin; zones may follow, e.g. "etcd test.com {"
        path /skydns  # key prefix in etcd (default /skydns); every record lives under it
        endpoint http://etcd:2379  # etcd address; inside the compose network, so use the alias; separate several with spaces
        fallthrough  # if the zone matches but no record can be produced, pass the query on to the next plugin
        # tls CERT KEY CACERT  # optional etcd TLS settings
    }
    prometheus  # metrics plugin
    cache 160
    reload
    loadbalance  # round-robin the order of answers
    forward . 114.114.114.114  # upstream DNS servers, space-separated
    log  # log queries
}
EOF
```

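The wildcard record configured here is for the router (OCP's ingress controller). If you have a hardware F5, list all nodes that run a router as its real servers and point the wildcard vhost domain at the F5's IP. A keepalived floating VIP also works. Here I take the lazy route and point it at the bastion, since HAProxy on the bastion reverse-proxies the ports of the hostNetwork `router-default` pods in the cluster.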

For the `forward` upstream, use the `nameserver` entries from the host's `/etc/resolv.conf`.

etcd

Create the etcd config file:

```shell
cat > /data/coredns/etcd/conf/etcd.config.yml <<'EOF'
name: coredns-etcd
data-dir: /var/lib/etcd
wal-dir: /var/lib/etcd/wal
auto-compaction-mode: periodic
auto-compaction-retention: "1"
snapshot-count: 5000
heartbeat-interval: 100
election-timeout: 1000
quota-backend-bytes: 0
listen-peer-urls: 'http://127.0.0.1:2380'
listen-client-urls: 'http://0.0.0.0:2379'
max-snapshots: 3
max-wals: 5
cors:
initial-advertise-peer-urls: 'http://127.0.0.1:2380'
advertise-client-urls: 'http://0.0.0.0:2379'
discovery:
discovery-fallback: 'proxy'
discovery-proxy:
discovery-srv:
initial-cluster: 'coredns-etcd=http://127.0.0.1:2380'  # must match "name" above
initial-cluster-token: 'etcd-coredns'
initial-cluster-state: 'new'
strict-reconfig-check: false
enable-v2: false
enable-pprof: true
proxy: 'off'
proxy-failure-wait: 5000
proxy-refresh-interval: 30000
proxy-dial-timeout: 1000
proxy-write-timeout: 5000
proxy-read-timeout: 0
force-new-cluster: false
EOF
```

config for dns

Make sure no other DNS server process on the host is already using port 53.
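Checking for a port conflict can be scripted by filtering `ss -tulnp` output. A minimal sketch, assuming the usual `ss` column layout where the fifth column is `Local Address:Port`; `port_in_use` is a hypothetical helper name.

```shell
# Hypothetical helper: read `ss -tulnp` output on stdin and succeed if any
# socket's local address (column 5) ends in ":PORT".
port_in_use() {
  awk -v port="$1" '
    NR > 1 { n = split($5, a, ":"); if (a[n] == port) found = 1 }
    END    { exit !found }'
}

# Usage: bail out before starting CoreDNS if something already owns 53.
# ss -tulnp | port_in_use 53 && echo "port 53 is taken"
```

The same check works later on the bootstrap node for ports 6443 and 22623.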

Verify resolution:

```shell
$ dig +short apps.openshift4.example.com @127.0.0.1
10.225.45.250

$ dig +short x.apps.openshift4.example.com @127.0.0.1
10.225.45.250
```

Then add records for the cluster's IPs:

```shell
alias etcdctlv3='docker exec -e ETCDCTL_API=3 coredns-etcd etcdctl'
etcdctlv3 put /skydns/com/example/openshift4/api '{"host":"10.225.45.250","ttl":60}'
etcdctlv3 put /skydns/com/example/openshift4/api-int '{"host":"10.225.45.250","ttl":60}'

# etcd runs on the master nodes by default
etcdctlv3 put /skydns/com/example/openshift4/etcd-0 '{"host":"10.225.45.251","ttl":60}'
etcdctlv3 put /skydns/com/example/openshift4/etcd-1 '{"host":"10.225.45.252","ttl":60}'
etcdctlv3 put /skydns/com/example/openshift4/etcd-2 '{"host":"10.225.45.222","ttl":60}'

# SRV records for the TLS etcd endpoints, one per master
etcdctlv3 put /skydns/com/example/openshift4/_tcp/_etcd-server-ssl/x1 '{"host":"etcd-0.openshift4.example.com","ttl":60,"priority":0,"weight":10,"port":2380}'
etcdctlv3 put /skydns/com/example/openshift4/_tcp/_etcd-server-ssl/x2 '{"host":"etcd-1.openshift4.example.com","ttl":60,"priority":0,"weight":10,"port":2380}'
etcdctlv3 put /skydns/com/example/openshift4/_tcp/_etcd-server-ssl/x3 '{"host":"etcd-2.openshift4.example.com","ttl":60,"priority":0,"weight":10,"port":2380}'

# Plus a record for each node's hostname
etcdctlv3 put /skydns/com/example/openshift4/bootstrap '{"host":"10.225.45.223","ttl":60}'
etcdctlv3 put /skydns/com/example/openshift4/master1 '{"host":"10.225.45.251","ttl":60}'
etcdctlv3 put /skydns/com/example/openshift4/master2 '{"host":"10.225.45.252","ttl":60}'
etcdctlv3 put /skydns/com/example/openshift4/master3 '{"host":"10.225.45.222","ttl":60}'

# bootstrap is reused as a worker afterwards, so worker1 gets the bootstrap IP
etcdctlv3 put /skydns/com/example/openshift4/worker1 '{"host":"10.225.45.223","ttl":60}'

# The registry node
etcdctlv3 put /skydns/com/example/openshift4/registry '{"host":"10.225.45.226","ttl":60}'
```
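The keys above follow a simple rule: the CoreDNS etcd plugin stores a name under its `path` (`/skydns` here) with the DNS labels in reverse order. A small sketch of that mapping can help avoid typos when adding more hosts; `skydns_key` is a hypothetical helper, not part of etcdctl or CoreDNS.

```shell
# Hypothetical helper: turn an FQDN into its /skydns key by reversing labels,
# e.g. api.openshift4.example.com -> /skydns/com/example/openshift4/api
skydns_key() {
  local fqdn=${1%.} key="" label   # drop an optional trailing dot
  local IFS=.                      # split the name on dots
  for label in $fqdn; do
    key="/${label}${key}"          # prepend, so the last label ends up first
  done
  echo "/skydns${key}"
}

skydns_key api.openshift4.example.com
# /skydns/com/example/openshift4/api
# etcdctlv3 put "$(skydns_key master1.openshift4.example.com)" '{"host":"10.225.45.251","ttl":60}'
```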

List all records:

```shell
etcdctlv3 get /skydns --prefix
```

validate for dns

Verify DNS, comparing the output against your plan to make sure every IP is correct:

```shell
yum install -y bind-utils
dig +short api.openshift4.example.com @127.0.0.1
dig +short api-int.openshift4.example.com @127.0.0.1
dig +short etcd-0.openshift4.example.com @127.0.0.1
dig +short etcd-1.openshift4.example.com @127.0.0.1
dig +short etcd-2.openshift4.example.com @127.0.0.1
dig +short bootstrap.openshift4.example.com @127.0.0.1
dig +short master1.openshift4.example.com @127.0.0.1
dig +short master2.openshift4.example.com @127.0.0.1
dig +short master3.openshift4.example.com @127.0.0.1
dig +short worker1.openshift4.example.com @127.0.0.1

dig +short -t SRV _etcd-server-ssl._tcp.openshift4.example.com @127.0.0.1
# SRV output:
10 33 2380 etcd-0.openshift4.example.com.
10 33 2380 etcd-1.openshift4.example.com.
10 33 2380 etcd-2.openshift4.example.com.
```
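Rather than eyeballing ten `dig` commands, a loop can print each answer and flag names that return nothing. A minimal sketch; `check_records` is a hypothetical helper, and it only detects missing records, so you still need to compare the printed IPs with your plan.

```shell
# Hypothetical helper: query SHORTNAME.DOMAIN for each short name against
# SERVER, print the answers, and return non-zero if any name is unresolved.
check_records() {
  local server=$1 domain=$2 name answer failed=0
  shift 2
  for name in "$@"; do
    answer=$(dig +short "${name}.${domain}" "@${server}")
    if [ -z "$answer" ]; then
      echo "MISSING ${name}.${domain}" >&2
      failed=1
    else
      # join multi-line answers onto one line
      echo "${name}.${domain} -> $(printf '%s' "$answer" | tr '\n' ' ')"
    fi
  done
  return "$failed"
}

# Usage:
# check_records 127.0.0.1 openshift4.example.com api api-int etcd-0 etcd-1 etcd-2 \
#   bootstrap master1 master2 master3 worker1
```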

Then point the system's `resolv.conf` at CoreDNS so we don't have to maintain `/etc/hosts`:

```shell
cp -a /etc/resolv.conf /etc/resolv.conf.bak
cat > /etc/resolv.conf <<'EOF'
search openshift4.example.com
nameserver 10.225.45.250
EOF
```

Load balancing

Once the bootstrap machine is up it runs the machine-config server, and while masters and workers install they fetch their configuration from this HTTP endpoint on the bootstrap node (try it yourself once bootstrap is up):

```shell
curl -sk https://api-int.openshift4.example.com:22623/config/master
```

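When installation finishes, bootstrap hands the control plane (the machine-config server and the Kubernetes API) over to the masters, so a load balancer is required no matter how many masters you have. Any tool will do: nginx, envoy and so on. With nginx, proxying at layer 4 while running layer-7 `/healthz` checks against the real servers requires an extra module, which is a hassle, so I use HAProxy here.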

```shell
mkdir -p /data/haproxy
cd /data/haproxy
cat > docker-compose.yml <<'EOF'
version: '3.2'
services:
  haproxy:
    image: haproxy:lts
    container_name: haproxy
    restart: always
    network_mode: host
    sysctls:
      - net.core.somaxconn=2000
    cap_drop:
      - ALL
    cap_add:
      - NET_BIND_SERVICE
    volumes:
      - /data/haproxy/config/:/etc/haproxy/
      - /etc/localtime:/etc/localtime:ro
      - /etc/hosts:/etc/hosts:ro
    command: ["-f", "/etc/haproxy/haproxy.cfg"]
    logging:
      driver: json-file
      options:
        max-file: '3'
        max-size: 100m
EOF
```

config for haproxy

The configuration file:

```shell
mkdir -p /data/haproxy/config/
cat > /data/haproxy/config/haproxy.cfg <<'EOF'
global
  maxconn 2000
  ulimit-n 16384
  log 127.0.0.1 local0 err

defaults
  log global
  mode http
  option httplog
  timeout connect 5000
  timeout client 50000
  timeout server 50000
  timeout http-request 15s
  timeout http-keep-alive 15s

listen stats
  bind :9000
  mode http
  stats enable
  stats uri /
  stats refresh 30s
  stats auth admin:openshift  # web UI login
  monitor-uri /healthz

frontend openshift-api-server
  bind :6443
  default_backend openshift-api-server
  mode tcp
  option tcplog

backend openshift-api-server
  balance roundrobin
  mode tcp
  option httpchk GET /healthz
  http-check expect string ok
  default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
  server bootstrap 10.225.45.223:6443 check check-ssl verify none  # remove this line after installation
  server master1 10.225.45.251:6443 check check-ssl verify none
  server master2 10.225.45.252:6443 check check-ssl verify none
  server master3 10.225.45.222:6443 check check-ssl verify none

frontend machine-config-server
  bind :22623
  default_backend machine-config-server
  mode tcp
  option tcplog

backend machine-config-server
  balance roundrobin
  mode tcp
  server bootstrap 10.225.45.223:22623 check  # remove this line after installation
  server master1 10.225.45.251:22623 check
  server master2 10.225.45.252:22623 check
  server master3 10.225.45.222:22623 check

frontend ingress-http
  bind :80
  default_backend ingress-http
  mode tcp
  option tcplog

backend ingress-http
  balance roundrobin
  mode tcp
  server master1 10.225.45.251:80 check
  server master2 10.225.45.252:80 check
  server master3 10.225.45.222:80 check

frontend ingress-https
  bind :443
  default_backend ingress-https
  mode tcp
  option tcplog

backend ingress-https
  balance roundrobin
  mode tcp
  server master1 10.225.45.251:443 check
  server master2 10.225.45.252:443 check
  server master3 10.225.45.222:443 check
EOF
```
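The same node list is repeated across four backends, which is where typos creep in. One option is to generate the `server` lines from a single list of `name=ip` pairs and paste them in. A minimal sketch; `server_lines` and `MASTERS` are hypothetical names, and the output is meant to be reviewed before it goes into `haproxy.cfg`.

```shell
# Hypothetical generator: print one HAProxy "server" line per name=ip pair
# for the given port and check options.
server_lines() {
  local port=$1 opts=$2 pair
  shift 2
  for pair in "$@"; do
    printf '  server %s %s:%s %s\n' "${pair%%=*}" "${pair#*=}" "$port" "$opts"
  done
}

MASTERS="master1=10.225.45.251 master2=10.225.45.252 master3=10.225.45.222"
# $MASTERS is left unquoted on purpose so each pair becomes its own argument.
server_lines 6443 "check check-ssl verify none" bootstrap=10.225.45.223 $MASTERS
server_lines 443 check $MASTERS
```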

The Quay registry used earlier cannot be moved off port 443, which would clash with HAProxy's `ingress-https` frontend on 443, so Quay is deployed on a separate machine. Start HAProxy:

```shell
cd /data/haproxy
docker-compose up -d
```

Browse to http://ip:9000 to see HAProxy's status page; the basic-auth credentials are `admin:openshift` from the config above.

http file download

Since this is not a PXE install, we still need an HTTP server: after booting the installer ISO, bootstrap and the masters download the raw.gz image from it and write it to disk. nginx provides it here.

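In a network where you control DHCP you would run a DHCP server, TFTP and similar services so that PXE installs automatically. I don't have that option, so I mount the installer ISO on each machine, press Tab at boot, and type a set of boot parameters telling it where to download the image from over HTTP and install it (that is, the RHCOS raw.gz file downloaded earlier). The HTTP server runs as an nginx container.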

Create the directories:

```shell
mkdir -p /data/install-nginx/{install/ignition,conf.d}
```

Create the docker-compose file and the nginx config. The load balancer already occupies port 80 on the host, so nginx listens on 8080:

```shell
cd /data/install-nginx
cat > docker-compose.yml <<'EOF'
version: '3.4'
services:
  nginx:
    image: nginx:alpine
    container_name: install-nginx
    hostname: install-nginx
    volumes:
      - /usr/share/zoneinfo/Asia/Shanghai:/etc/localtime:ro
      - /data/install-nginx/install:/usr/share/nginx/html
      - /data/install-nginx/conf.d/:/etc/nginx/conf.d/
    network_mode: "host"
    logging:
      driver: json-file
      options:
        max-file: '3'
        max-size: 100m
EOF

cat > /data/install-nginx/conf.d/default.conf <<'EOF'
server {
    listen       8080;
    server_name  localhost;

    location / {
        root   /usr/share/nginx/html;
        index  index.html index.htm;
        autoindex on;
    }

    error_page   500 502 503 504  /50x.html;
    location = /50x.html {
        root   /usr/share/nginx/html;
    }
}
EOF
```

Start install-nginx (`docker-compose up -d` in `/data/install-nginx`). If http://ip:8080 shows a directory listing, it's working.

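rhcos iso

Download the RHCOS images (download link, or download here). We only need the two files below, and their version must be less than or equal to the OCP version. The installer ISO is mounted on the machine and runs in memory; following the boot cmdline you type, it downloads `metal.x86_64.raw.gz` and the Ignition file from the HTTP server, then installs and configures the system.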

```
rhcos-4.5.6-x86_64-installer.x86_64.iso
rhcos-4.5.6-x86_64-metal.x86_64.raw.gz
```

The installer ISO is mounted on the machine and booted from its CD drive; the raw.gz goes into nginx's HTTP directory:

```shell
cd /data/install-nginx/install/
wget https://mirror.openshift.com/pub/openshift-v4/dependencies/rhcos/latest/latest/rhcos-4.5.6-x86_64-metal.x86_64.raw.gz
```
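The rule that the RHCOS version must not exceed the OCP version can be checked mechanically. A minimal sketch using GNU `sort -V`, which compares version strings field by field (so 4.5.10 sorts after 4.5.9); both helper names are hypothetical, and `rhcos_version` assumes the `rhcos-<version>-<arch>-...` file naming shown above.

```shell
# Hypothetical helpers.
# Extract "4.5.6" from a name like rhcos-4.5.6-x86_64-metal.x86_64.raw.gz
rhcos_version() {
  local v=${1#rhcos-}   # strip the "rhcos-" prefix
  echo "${v%%-*}"       # keep everything before the first "-"
}

# Succeed if version $1 <= version $2 (the smaller one sorts first).
version_le() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$1" ]
}

v=$(rhcos_version rhcos-4.5.6-x86_64-metal.x86_64.raw.gz)
version_le "$v" 4.5.9 && echo "RHCOS $v is fine for OCP 4.5.9"
```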

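Whether the machines are physical or virtual, don't mount the installer ISO remotely from your own PC through the BMC or something like vSphere. Otherwise bootstrap and the masters may not respond after you type the boot cmdline at the logo screen and press Enter. Upload the ISO to storage close to the machines instead; for ESXi or vSphere, put it on a datastore there.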

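Installation prep

Generate an SSH key pair

During installation we debug the OCP install and perform disaster recovery from the bastion, so an SSH key must be set up on the bastion; the installer uses it through ssh-agent.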

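With this private key you can log in from the bastion to the master nodes as the `core` user. When the cluster is deployed, the matching public key is added to the `core` user's `~/.ssh/authorized_keys`.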

Create an SSH key without a passphrase:

```shell
ssh-keygen -t rsa -b 4096 -N '' -f ~/.ssh/new_rsa
```

Later, SSH into the masters and nodes with:

```shell
ssh -i ~/.ssh/new_rsa core@10.225.45.251
```

Create the install config

OCP's YAML and install files are kept under `/data/ocpinstall`. The custom config file must be named `install-config.yaml`. Its contents:

```shell
mkdir /data/ocpinstall
cd /data/ocpinstall
cat > install-config.yaml <<'EOF'
apiVersion: v1
baseDomain: example.com
compute:
- hyperthreading: Enabled
  name: worker
  replicas: 0
controlPlane:
  hyperthreading: Enabled
  name: master
  replicas: 3
metadata:
  name: openshift4
networking:
  clusterNetwork:
  - cidr: 10.128.0.0/14
    hostPrefix: 23
  networkType: OpenShiftSDN
  serviceNetwork:
  - 172.30.0.0/16
platform:
  none: {}
fips: false
pullSecret: '{"auths": ...}'
sshKey: 'ssh-rsa ...'
additionalTrustBundle: |
  -----BEGIN CERTIFICATE-----
  (certificate body; indent every line by two spaces so the YAML stays aligned)
  -----END CERTIFICATE-----
imageContentSources:
- mirrors:
  - registry.openshift4.example.com/ocp4/openshift4
  source: quay.io/openshift-release-dev/ocp-release
- mirrors:
  - registry.openshift4.example.com/ocp4/openshift4
  source: quay.io/openshift-release-dev/ocp-v4.0-art-dev
EOF
```
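Getting the two-space indentation of `additionalTrustBundle` right by hand is error-prone. A minimal sketch that prints the block ready to paste, assuming the certificate file copied to the bastion earlier (`ssl.cert`); the helper name is hypothetical.

```shell
# Hypothetical helper: print the additionalTrustBundle YAML block with every
# line of the PEM file indented by two spaces.
trust_bundle_yaml() {
  echo "additionalTrustBundle: |"
  sed 's/^/  /' "$1"
}

# Usage: trust_bundle_yaml ssl.cert
```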

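- `baseDomain`: every internal OpenShift DNS record must be a subdomain of this base domain and include the cluster name.
- `compute`: compute node configuration. This is an array, and each element must start with a hyphen `-`.
- `hyperthreading`: `Enabled` turns on simultaneous multithreading (hyper-threading), which is the default and improves core performance. If you disable it, disable it for both control plane and compute nodes.
- `compute.replicas`: number of compute nodes. We create compute nodes by hand, so set it to 0.
- `controlPlane.replicas`: number of control-plane nodes. It must equal the number of etcd nodes; 3 gives high availability.
- `metadata.name`: the cluster name, i.e. `<cluster_name>` in the DNS records above.
- `cidr`: the range Pod IPs are allocated from; it must not overlap the physical network.
- `hostPrefix`: the subnet prefix length assigned to each node, comparable to Kubernetes' `--node-cidr-mask-size`. With `hostPrefix: 23`, each node gets a /23 out of `cidr`, i.e. 510 Pod IPs; with /24 a node gets at most 254, which may turn out to be too few later.
- `serviceNetwork`: the Service IP pool; only one range may be set.
- `pullSecret`: the pull secret used in the previous article; `jq -c . /root/pull-secret.json` compacts it to one line.
- `sshKey`: the public key created above; view it with `cat ~/.ssh/new_rsa.pub`.
- `additionalTrustBundle`: the trust certificate of the private Quay registry; view it on the registry node with `cat /data/quay/config/ssl.cert`.
- `imageContentSources`: taken from the earlier `oc adm release mirror` output.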
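The `hostPrefix` figures follow from simple subnet arithmetic: a /23 holds 2^(32-23) = 512 addresses, and excluding the network and broadcast addresses gives the 510 quoted above. The sketch also shows how many such per-node subnets fit in the `/14` cluster network. Helper names are hypothetical, and actual usable Pod IPs may be slightly lower if the SDN reserves an address such as the gateway.

```shell
# Pod IPs per node for a given hostPrefix: 2^(32 - hostPrefix) - 2.
pods_per_node() {
  echo $(( (1 << (32 - $1)) - 2 ))
}

# Number of /hostPrefix node subnets that fit in a /cidr_prefix clusterNetwork.
nodes_in_cidr() {
  local cidr_prefix=$1 host_prefix=$2
  echo $(( 1 << (host_prefix - cidr_prefix) ))
}

pods_per_node 23      # 510
pods_per_node 24      # 254
nodes_in_cidr 14 23   # 512 nodes for 10.128.0.0/14 with hostPrefix 23
```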

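`platform` also supports GCP, AWS, vSphere, bare metal and others, where the installer creates the machines automatically; worth exploring if you're interested.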

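With the file edited, generate the Ignition configs next. Generating them deletes `install-config.yaml`, so back it up first. Its content is also rendered into a ConfigMap manifest at `manifests/cluster-config.yaml`, which ends up as `cm/cluster-config-v1` in `kube-system` once the cluster is up.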

Generate the deployment config

```shell
cd /data/ocpinstall
cp install-config.yaml install-config.yaml.$(date +%Y%m%d)
```

Create the deployment manifests:

```shell
cd /data/ocpinstall
openshift-install create manifests
```

If you have many worker nodes and don't want Pods scheduled on the masters, edit `manifests/cluster-scheduler-02-config.yml` and set `mastersSchedulable` to `false` to keep Pods off the control-plane nodes.
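If you do want that, the edit can be made with `sed` instead of an editor. A minimal sketch, assuming the generated file contains the literal line `mastersSchedulable: true` (check with `grep` first); the function name is hypothetical.

```shell
# Hypothetical helper: flip mastersSchedulable to false in place and
# confirm the change took effect.
disable_master_scheduling() {
  local f=${1:-manifests/cluster-scheduler-02-config.yml}
  sed -i 's/mastersSchedulable: true/mastersSchedulable: false/' "$f"
  grep -q 'mastersSchedulable: false' "$f"
}

# Usage, from /data/ocpinstall after "openshift-install create manifests":
# disable_master_scheduling
```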

The next `openshift-install create` step consumes every file under `manifests/`, so back the directory up here; you can study it later if you're curious:

```shell
cp -a manifests manifests-bak
# Convert the deployment manifests into Ignition config files
openshift-install create ignition-configs
```

`ll manifests/` (or `manifests-bak/` after the step above) shows a set of YAML files.

The generated files are listed below. There are hidden files as well, worth a look if you're curious:

```
├── auth
│   ├── kubeadmin-password
│   └── kubeconfig
├── bootstrap.ign
├── master.ign
├── metadata.json
└── worker.ign
```

Copy all the Ignition files into the HTTP server's directory:

```shell
# The leading backslash bypasses an interactive "cp -i" alias
\cp -a /data/ocpinstall/*.ign /data/install-nginx/install/ignition/
# nginx runs as user nginx and the .ign files grant no "other" permissions, so add o+r
chmod o+r /data/install-nginx/install/ignition/*.ign

# Rename the raw.gz so the URL in the boot cmdline is shorter to type
cd /data/install-nginx/install/
mv rhcos-4.*-x86_64-metal.x86_64.raw.gz rhcos.raw.gz
```
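A forgotten `chmod` only shows up later as a failed download at the installer prompt, so it can help to list any `.ign` file still missing the "other" read bit. A minimal sketch with `find`; the helper name is hypothetical.

```shell
# Hypothetical check: print every .ign file under DIR that is not
# world-readable (missing the 004 permission bit).
unreadable_ign() {
  find "$1" -name '*.ign' ! -perm -004
}

# Usage:
# [ -z "$(unreadable_ign /data/install-nginx/install/ignition)" ] && echo "all readable"
```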

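Install the cluster

Install the bootstrap machine first, because when a master comes up it requests `https://api-int.openshift4.example.com:22623/config/master`. If the containers and pods on bootstrap are not ready yet, the master, after installing its OS and rebooting, keeps retrying this endpoint: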

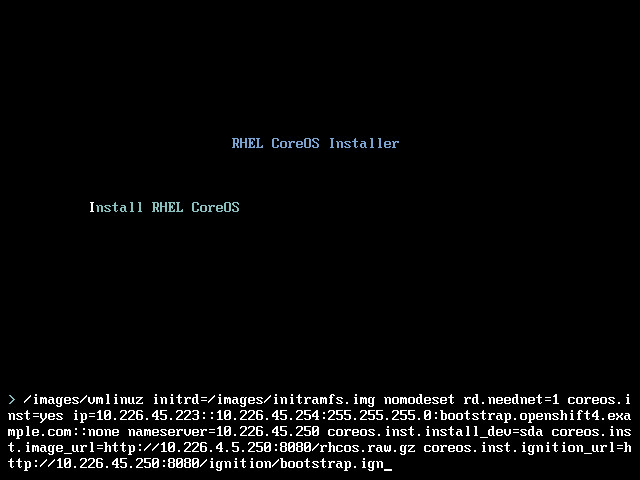

Once the bootstrap machine is created, mount the installer ISO in its CD drive; after power-on it stops at the boot menu.

At the RHCOS Installer screen, press Tab to edit the boot parameters.

Append the following after the default `coreos.inst=yes` (copy and paste isn't possible here, so check carefully before pressing Enter):

```
ip=10.225.45.223::10.225.45.254:255.255.255.0:bootstrap.openshift4.example.com:ens192:none nameserver=10.225.45.250 coreos.inst.install_dev=sda coreos.inst.image_url=http://10.225.45.250:8080/rhcos.raw.gz coreos.inst.ignition_url=http://10.225.45.250:8080/ignition/bootstrap.ign
```

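Parameters take the form `key=value key1=value1`, separated by spaces:

| key | value | notes |
|---|---|---|
| `ip` | `$IPADDRESS::$DEFAULTGW:$NETMASK:$HOSTNAMEFQDN:$IFACE:none` | `IFACE` appears to always be `ens192` in RHCOS; in my tests it could be left empty |
| `nameserver` | `${bastion_ip}` | the cluster's DNS server, here the bastion |
| `coreos.inst.install_dev` | `sda` | the disk RHCOS is installed to. Even with a single disk it is not detected automatically, so it cannot be omitted. My environment is vSphere, where the disk is `sda`; I haven't tried OpenStack VMs (probably `vda` there?) |
| `coreos.inst.image_url` | `http://${bastion_ip}:8080/rhcos-metal.raw.gz` | URL of the RHCOS metal image; use the actual URL on your HTTP server |
| `coreos.inst.ignition_url` | `http://${bastion_ip}:8080/ignition/${type}.ign` | URL of the Ignition file: `bootstrap.ign` for bootstrap, `master.ign` for masters |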
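Since every node needs nearly the same long string with a different IP, hostname and Ignition type, generating it on the bastion and reading it off the screen reduces typing mistakes. A minimal sketch; `boot_cmdline` is a hypothetical helper, and its defaults (gateway `.254`, `/24`, `ens192`, `sda`, bastion `10.225.45.250`, the renamed `rhcos.raw.gz`) are this article's values, overridable through the assumed variables `BASTION_IP`, `GATEWAY`, `NETMASK`, `NIC` and `DISK`.

```shell
# Hypothetical helper: build the installer boot parameters for one node.
# Args: node IP, FQDN hostname, Ignition type (bootstrap | master | worker).
boot_cmdline() {
  local ip=$1 host=$2 ign=$3
  local bastion=${BASTION_IP:-10.225.45.250}
  local gw=${GATEWAY:-10.225.45.254} mask=${NETMASK:-255.255.255.0}
  local nic=${NIC:-ens192} disk=${DISK:-sda}
  printf 'ip=%s::%s:%s:%s:%s:none nameserver=%s coreos.inst.install_dev=%s ' \
    "$ip" "$gw" "$mask" "$host" "$nic" "$bastion" "$disk"
  printf 'coreos.inst.image_url=http://%s:8080/rhcos.raw.gz coreos.inst.ignition_url=http://%s:8080/ignition/%s.ign\n' \
    "$bastion" "$bastion" "$ign"
}

# bootstrap uses bootstrap.ign; the masters use master.ign
boot_cmdline 10.225.45.223 bootstrap.openshift4.example.com bootstrap
boot_cmdline 10.225.45.251 master1.openshift4.example.com master
```

The first call reproduces the bootstrap line shown above character for character.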

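If the installation fails, it drops into an emergency shell. Check networking and DNS resolution; if they're broken, the parameters above were usually mistyped. Run `reboot` to leave the shell and start over from the first step.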

After a successful install, log in to the bootstrap node from the bastion with `ssh -i ~/.ssh/new_rsa core@10.225.45.223`, switch to root with `sudo su`, and verify:

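That the network configuration matches your settings:

- `hostname`
- `ip route`
- `cat /etc/resolv.conf`

That the bootstrap services started:

- `podman ps -a` shows the services running as containers
- `ss -tulnp` shows ports 6443 and 22623 listening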

A quick look at how the bootstrap node starts up: it runs `bootkube.service`, and the unit's systemd `ConditionPathExists` uses a marker file to keep it from running again once it has succeeded:

```
[root@bootstrap core]# systemctl cat bootkube
# /etc/systemd/system/bootkube.service
[Unit]
Description=Bootstrap a Kubernetes cluster
Requires=crio-configure.service
Wants=kubelet.service
After=kubelet.service crio-configure.service
ConditionPathExists=!/opt/openshift/.bootkube.done

[Service]
WorkingDirectory=/opt/openshift
ExecStart=/usr/local/bin/bootkube.sh
```

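Reading that script, you'll see it first runs some containers with podman, which then bring up the temporary control plane; the temporary control plane itself runs in containers under CRI-O. A bit convoluted, so see below: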

```
[root@bootstrap core]# podman ps -a
CONTAINER ID  IMAGE  COMMAND  CREATED  STATUS  PORTS  NAMES
7a9a800c6819  quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:416ad8f3ddd49adae4f7db66f8a130319084604296c076c2a6d22264a5688d65  start --tear-down...  12 minutes ago  Up 12 minutes ago  strange_yonath
8997b001e324  quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:bf0d5fd64ab53dfdd477c90a293f3ec90379a22e8b356044082e807565699863  render --dest-dir...  12 minutes ago  Exited (0) 12 minutes ago  stoic_williamson
7c45d60ecd39  quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:bcd6cd1559b62e4a8031cf0e1676e25585845022d240ac3d927ea47a93469597  bootstrap --etcd-...  12 minutes ago  Exited (0) 12 minutes ago  lucid_heisenberg
fe300339df9b  quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:74c4a3c93c7fba691195dec0190a47cf194759b381d41045a52b6c86aa4169c4  render --prefix=c...  12 minutes ago  Exited (0) 12 minutes ago  suspicious_kepler
102c20d247d7  quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:ddb26e047d0e0d7b11bdb625bd7a941ab66f7e1ef5a5f7455d8694e7ba48989d  /usr/bin/cluster-...  12 minutes ago  Exited (0) 12 minutes ago  optimistic_euler
1b7c759792c0  quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:ca556d4515818e3e10d2e771d986784460509146ea9dd188fb8ba6f6ac694132  /usr/bin/cluster-...  13 minutes ago  Exited (0) 13 minutes ago  nice_lehmann
8dab8ea23ebd  quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:e4758039391099dc1b0265099474113dcd8bcce84a1c92d02c1ef760793079e6  /usr/bin/cluster-...  13 minutes ago  Exited (0) 13 minutes ago  clever_meitner
8bd804fb88bf  quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:c93a0de1e4cb3f04e37d547c1b81a2a22c4d9d01c013374c466ed3fd0416215a  /usr/bin/cluster-...  13 minutes ago  Exited (0) 13 minutes ago  recursing_ramanujan
11dc7e222bf9  quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:13179789b2e15ecf749f5ab51cf11756e2831bc019c02ed0659182e805e725dd  /usr/bin/cluster-...  13 minutes ago  Exited (0) 13 minutes ago  wizardly_easley
b60208539287  registry.openshift4.example.com/ocp4/openshift4@sha256:7ad540594e2a667300dd2584fe2ede2c1a0b814ee6a62f60809d87ab564f4425  render --output-d...  13 minutes ago  Exited (0) 13 minutes ago  goofy_bassi
428dec4624e1  quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:13179789b2e15ecf749f5ab51cf11756e2831bc019c02ed0659182e805e725dd  /usr/bin/grep -oP...  13 minutes ago  Exited (0) 13 minutes ago  pedantic_northcutt
[root@bootstrap core]# crictl pods
POD ID  CREATED  STATE  NAME  NAMESPACE  ATTEMPT
ec6155d87b866  13 minutes ago  Ready  bootstrap-kube-scheduler-bootstrap.openshift4.example.com  kube-system  0
6808217a146b7  13 minutes ago  Ready  bootstrap-kube-controller-manager-bootstrap.openshift4.example.com  kube-system  0
7f1ae27edd3fe  13 minutes ago  Ready  bootstrap-kube-apiserver-bootstrap.openshift4.example.com  kube-system  0
cc92475639870  13 minutes ago  Ready  cloud-credential-operator-bootstrap.openshift4.example.com  openshift-cloud-credential-operator  0
08c45b635d5c1  13 minutes ago  Ready  bootstrap-cluster-version-operator-bootstrap.openshift4.example.com  openshift-cluster-version  0
69fc31911a89f  14 minutes ago  Ready  bootstrap-machine-config-operator-bootstrap.openshift4.example.com  default  0
7d2598ac710e6  14 minutes ago  Ready  etcd-bootstrap-member-bootstrap.openshift4.example.com  openshift-etcd  0
```

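If you check right away, the containers and pods above may not be up yet. You can follow the script's log with the command below; once bootkube.sh finishes normally (after all masters have also come up), the log prints `bootkube.service complete`.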

```
$ journalctl -b -f -u bootkube.service
...
Jun 05 00:24:12 bootstrap.openshift4.example.com bootkube.sh[12571]: I0605 00:24:12.108179 1 waitforceo.go:67] waiting on condition EtcdRunningInCluster in etcd CR /cluster to be True.
Jun 05 00:24:21 bootstrap.openshift4.example.com bootkube.sh[12571]: I0605 00:24:21.595680 1 waitforceo.go:67] waiting on condition EtcdRunningInCluster in etcd CR /cluster to be True.
Jun 05 00:24:26 bootstrap.openshift4.example.com bootkube.sh[12571]: I0605 00:24:26.250214 1 waitforceo.go:67] waiting on condition EtcdRunningInCluster in etcd CR /cluster to be True.
Jun 05 00:24:26 bootstrap.openshift4.example.com bootkube.sh[12571]: I0605 00:24:26.306421 1 waitforceo.go:67] waiting on condition EtcdRunningInCluster in etcd CR /cluster to be True.
Jun 05 00:24:29 bootstrap.openshift4.example.com bootkube.sh[12571]: I0605 00:24:29.097072 1 waitforceo.go:64] Cluster etcd operator bootstrapped successfully
Jun 05 00:24:29 bootstrap.openshift4.example.com bootkube.sh[12571]: I0605 00:24:29.097306 1 waitforceo.go:58] cluster-etcd-operator bootstrap etcd
Jun 05 00:24:29 bootstrap.openshift4.example.com podman[16531]: 2020-06-05 00:24:29.120864426 +0000 UTC m=+17.965364064 container died 77971b6ca31755a89b279fab6f9c04828c4614161c2e678c7cba48348e684517 (image=quay.io/openshift-release-dev/ocp-v4.0-art-dev@sha256:9f7a02df3a5d91326d95e444e2e249f8205632ae986d6dccc7f007ec65c8af77, name=recursing_cerf)
Jun 05 00:24:29 bootstrap.openshift4.example.com bootkube.sh[12571]: bootkube.service complete
```
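Rather than watching the log scroll by, you can poll the same journal until the completion marker shows up. The sketch below assumes it runs on the bootstrap node; the function names and default poll values are my own, and it only looks for the `bootkube.service complete` line shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (names are illustrative, not part of OpenShift).

# Succeeds if the log text on stdin contains the bootkube completion marker.
bootkube_log_has_completed() {
  grep -q 'bootkube.service complete'
}

# Poll the bootkube.service journal (without -f, so each check terminates)
# until the marker appears or max_tries polls have failed.
wait_for_bootkube_complete() {
  local interval="${1:-30}" max_tries="${2:-120}" i
  for ((i = 1; i <= max_tries; i++)); do
    if journalctl -b -u bootkube.service --no-pager | bootkube_log_has_completed; then
      echo "bootkube.service complete"
      return 0
    fi
    sleep "$interval"
  done
  echo "bootkube did not complete after $max_tries checks" >&2
  return 1
}
```

For example, `wait_for_bootkube_complete 30 120` checks every 30 seconds for up to an hour. Polling is used instead of piping `journalctl -f` into a filter, because a `-f` pipeline can stay alive after bootkube stops writing to the journal.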

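master

Once `crictl pods` on the bootstrap node looks healthy, start the master nodes. As with the bootstrap node, enter the boot cmdline by hand, paying attention to the IP, the hostname, and the ignition file at the end of the URL, which must be `master.ign`: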

```
ip=10.225.45.251::10.225.45.254:255.255.255.0:master1.openshift4.example.com:ens192:none nameserver=10.225.45.250 coreos.inst.install_dev=sda coreos.inst.image_url=http://10.225.45.250:8080/rhcos.raw.gz coreos.inst.ignition_url=http://10.225.45.250:8080/ignition/master.ign
```

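worker

You can also boot the workers now with the corresponding parameters; a reference cmdline follows. In my case, I reinstalled the bootstrap machine later, after bootstrapping had completed, and booted it as a worker.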

```
ip=10.225.45.223::10.225.45.254:255.255.255.0:worker1.openshift4.example.com:ens192:none nameserver=10.225.45.250 coreos.inst.install_dev=sda coreos.inst.image_url=http://10.225.45.250:8080/rhcos.raw.gz coreos.inst.ignition_url=http://10.225.45.250:8080/ignition/worker.ign
```
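Because the bootstrap, master and worker cmdlines differ only in IP, hostname and ignition file, it can help to generate them instead of retyping each one. A minimal sketch: the defaults are copied from this article's lab network, the environment-variable overrides (`GATEWAY`, `NETMASK`, `NIC`, `DNS_SERVER`, `HTTP_BASE`, `INSTALL_DEV`) are hypothetical names, and the bootstrap case assumes `bootstrap.ign` is served from the same `/ignition/` path as the others.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the RHCOS installer boot cmdline for one node.
# Usage: rhcos_boot_cmdline <ip> <hostname> <bootstrap|master|worker>
rhcos_boot_cmdline() {
  local ip="$1" host="$2" role="$3"
  local gw="${GATEWAY:-10.225.45.254}"
  local mask="${NETMASK:-255.255.255.0}"
  local nic="${NIC:-ens192}"
  local dns="${DNS_SERVER:-10.225.45.250}"
  local http="${HTTP_BASE:-http://10.225.45.250:8080}"
  local disk="${INSTALL_DEV:-sda}"

  case "$role" in
    bootstrap|master|worker) ;;
    *) echo "unknown role: $role" >&2; return 1 ;;
  esac

  # dracut static ip= syntax: <ip>::<gateway>:<netmask>:<hostname>:<interface>:none
  printf 'ip=%s::%s:%s:%s:%s:none nameserver=%s coreos.inst.install_dev=%s coreos.inst.image_url=%s/rhcos.raw.gz coreos.inst.ignition_url=%s/ignition/%s.ign\n' \
    "$ip" "$gw" "$mask" "$host" "$nic" "$dns" "$disk" "$http" "$http" "$role"
}
```

For example, `rhcos_boot_cmdline 10.225.45.251 master1.openshift4.example.com master` reproduces the master1 cmdline above, and `NIC=eth0 rhcos_boot_cmdline ...` overrides a single default for one call.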

The installation is the same; just make sure `coreos.inst.ignition_url` ends in `worker.ign`. Once the node is up, its CSRs must be approved.

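List the pending certificate signing requests (CSRs) and make sure that, for every node added to the cluster, you can see both a client request and a server request in `Pending` or `Approved` state. Approve the ones that are `Pending`: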

```shell
oc get csr
# replace xxx with the name of a Pending CSR from the list above
oc adm certificate approve xxx
```

Or approve all pending CSRs at once:

```shell
# Pending CSRs have an empty status object; collect their names and approve them.
CSR_NAMES=$(oc get csr -o json | jq -r '.items[] | select(.status == {}) | .metadata.name')
[ -n "$CSR_NAMES" ] && oc adm certificate approve $CSR_NAMES
```
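Worker CSRs arrive in two waves: after a node's client CSR is approved, its kubelet submits a serving-certificate CSR that also needs approval. A small loop saves re-running the command by hand. This is a sketch: the go-template filter `{{if not .status}}` selects CSRs with an empty status (the same condition as the `jq` filter above, in the form the OpenShift documentation also uses), while the function names and the default round and interval values are illustrative.

```shell
#!/usr/bin/env bash
# Hypothetical helper: approve every CSR whose .status is still empty (Pending).
approve_pending_csrs() {
  local name count=0
  while IFS= read -r name; do
    [ -n "$name" ] || continue
    oc adm certificate approve "$name" && count=$((count + 1))
  done < <(oc get csr -o go-template='{{range .items}}{{if not .status}}{{.metadata.name}}{{"\n"}}{{end}}{{end}}')
  echo "approved $count CSR(s)"
}

# Hypothetical helper: repeat the approval a few times so that serving CSRs,
# which only appear after the client CSRs are approved, are caught as well.
approve_csrs_for_a_while() {
  local rounds="${1:-10}" interval="${2:-30}" i
  for ((i = 0; i < rounds; i++)); do
    approve_pending_csrs
    sleep "$interval"
  done
}
```

Running `approve_csrs_for_a_while 10 30` right after booting a worker covers roughly five minutes; stop it once `oc get csr` shows nothing Pending.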

Repeat this step whenever you add more workers later.

Verify the cluster

Configure the oc kubeconfig

On the bastion node, generating the deployment manifests also produced a kubeconfig, under /data/ocpinstall/auth:

```
├── auth
│   ├── kubeadmin-password
│   └── kubeconfig
```

As with plain Kubernetes, copy it to the default location that oc reads:

```shell
mkdir -p ~/.kube
# the leading backslash bypasses a "cp -i" alias so the copy never prompts
\cp /data/ocpinstall/auth/kubeconfig ~/.kube/config
oc whoami
```

Set up bash completion for oc:

```shell
cat > /etc/bash_completion.d/oc <<'EOF'
source <(oc completion bash)
EOF
```

Verify the cluster nodes

Check whether the nodes have come up:

```
$ oc get node
NAME                             STATUS   ROLES           AGE     VERSION
master1.openshift4.example.com   Ready    master,worker   3d14h   v1.18.3+6c42de8
master2.openshift4.example.com   Ready    master,worker   3d14h   v1.18.3+6c42de8
master3.openshift4.example.com   Ready    master,worker   3d14h   v1.18.3+6c42de8
```
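To check this in a script rather than by eye, you can filter the `--no-headers` output. A rough sketch: `check_nodes` is a hypothetical helper that treats any STATUS other than exactly `Ready` (including `NotReady` and a cordoned `Ready,SchedulingDisabled`) as worth reporting, and fails when fewer nodes than expected are Ready.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `oc get node --no-headers` on stdin, print every
# node that is not plainly Ready, and fail if fewer than $1 nodes are Ready.
check_nodes() {
  local expected="$1"
  awk -v want="$expected" '
    $2 == "Ready" { ready++; next }
    { print "not ready: " $1 " (" $2 ")" }
    END {
      if (ready + 0 < want + 0) {
        print "only " (ready + 0) " of " want " nodes Ready"
        exit 1
      }
    }'
}
```

Usage: `oc get node --no-headers | check_nodes 3` prints nothing and succeeds for the three masters shown above.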

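Check the cluster's pods. If some pods are still crash-looping after a long time, see the troubleshooting section below, because the installer's code waits until no pods are crashing.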
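A rough filter for spotting those pods in `oc get pods -A --no-headers` output. It assumes the column order NAMESPACE, NAME, READY, STATUS, RESTARTS that oc prints in this release; the helper name and the default restart threshold of 5 are arbitrary choices.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `oc get pods -A --no-headers` on stdin and print
# pods whose STATUS is neither Running nor Completed, plus running pods whose
# restart count exceeds the threshold given as $1 (default 5).
problem_pods() {
  local max_restarts="${1:-5}"
  awk -v max="$max_restarts" '
    $4 != "Running" && $4 != "Completed" { print $1 "/" $2 " status=" $4; next }
    $5 + 0 > max + 0 { print $1 "/" $2 " restarts=" $5 }'
}
```

Usage: `oc get pods -A --no-headers | problem_pods 5`; an empty result means nothing is obviously stuck.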

Use openshift-install to check whether bootstrapping has completed. This can take a long time; if something goes wrong, see the troubleshooting section below.

```
$ openshift-install --dir=/data/ocpinstall wait-for bootstrap-complete --log-level=debug
DEBUG OpenShift Installer 4.5.9
DEBUG Built from commit 0d5c871ce7d03f3d03ab4371dc39916a5415cf5c
INFO Waiting up to 20m0s for the Kubernetes API at https://api.openshift4.example.com:6443...
INFO API v1.18.3+6c42de8 up
INFO Waiting up to 40m0s for bootstrapping to complete...
DEBUG Bootstrap status: complete
INFO It is now safe to remove the bootstrap resources
DEBUG Time elapsed per state:
DEBUG Bootstrap Complete: 24m13s
INFO Time elapsed: 24m13s
```

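Looking at the installer source code: in its final stage, bootkube.sh creates a ConfigMap, kube-system/bootstrap, in the cluster to record its status, and `wait-for bootstrap-complete` above simply waits for that ConfigMap to be created (see the `waitForBootstrapConfigMap` function in the source).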
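The same condition can be polled directly with oc, which is handy when openshift-install is not at hand. This is a sketch: it assumes the ConfigMap keeps its state under `data.status` with the value `complete` (consistent with the `Bootstrap status: complete` debug line above), and the helper name and retry counts are arbitrary.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll kube-system/bootstrap until data.status is
# "complete". Arguments: number of tries (default 80), seconds between tries
# (default 30).
wait_for_bootstrap_cm() {
  local tries="${1:-80}" interval="${2:-30}" status i
  for ((i = 1; i <= tries; i++)); do
    status=$(oc -n kube-system get configmap bootstrap \
      -o jsonpath='{.data.status}' 2>/dev/null)
    if [ "$status" = "complete" ]; then
      echo "bootstrap configmap status: complete"
      return 0
    fi
    sleep "$interval"
  done
  echo "timed out waiting for kube-system/bootstrap" >&2
  return 1
}
```

With the defaults this waits up to 40 minutes, the same budget the installer reports above.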

web console

```
$ cd /data/ocpinstall
$ openshift-install wait-for install-complete
INFO Waiting up to 30m0s for the cluster at https://api.openshift4.example.com:6443 to initialize...
INFO Waiting up to 10m0s for the openshift-console route to be created...
INFO Install complete!
INFO To access the cluster as the system:admin user when using 'oc', run 'export KUBECONFIG=/data/ocpinstall/auth/kubeconfig'
INFO Access the OpenShift web-console here: https://console-openshift-console.apps.openshift4.example.com
INFO Login to the console with user: "kubeadmin", and password: "xxxx-xxxx-zKu8J-W7QRi"
INFO Time elapsed: 1s
```

Note the Web Console URL, user name and password printed at the end. If you forget the password, you can read it from /data/ocpinstall/auth/kubeadmin-password.

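To open the Web Console from your local machine: the cluster already runs an ingress controller and our entry point is 10.225.45.250, so add the following entries to your local hosts file: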

```
10.225.45.250 console-openshift-console.apps.openshift4.example.com
10.225.45.250 oauth-openshift.apps.openshift4.example.com
```
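If you set this up on several workstations, a small idempotent helper avoids duplicate entries. A sketch using the article's values (ingress IP 10.225.45.250, apps domain apps.openshift4.example.com); the helper name is hypothetical. Hosts files cannot express wildcards, so every additional route you want to reach needs its own line, whereas a wildcard `*.apps` record on the DNS server covers them all.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the console and OAuth hostnames to a hosts file
# unless a non-comment line already lists them.
# Usage: add_console_hosts <ingress-ip> <apps-domain> [file, default /etc/hosts]
add_console_hosts() {
  local ip="$1" apps_domain="$2" file="${3:-/etc/hosts}" host
  for host in "console-openshift-console.$apps_domain" "oauth-openshift.$apps_domain"; do
    # awk exits 0 if some non-comment line already names this host
    if ! awk -v h="$host" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 } END { exit !found }' "$file"; then
      printf '%s %s\n' "$ip" "$host" >> "$file"
    fi
  done
}
```

Usage (as root): `add_console_hosts 10.225.45.250 apps.openshift4.example.com`.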

Open https://console-openshift-console.apps.openshift4.example.com in a browser and log in with the user name (kubeadmin) and password printed above. After the first login, a banner at the top says:

```
You are logged in as a temporary administrative user. Update the Cluster OAuth configuration to allow others to log in.
```

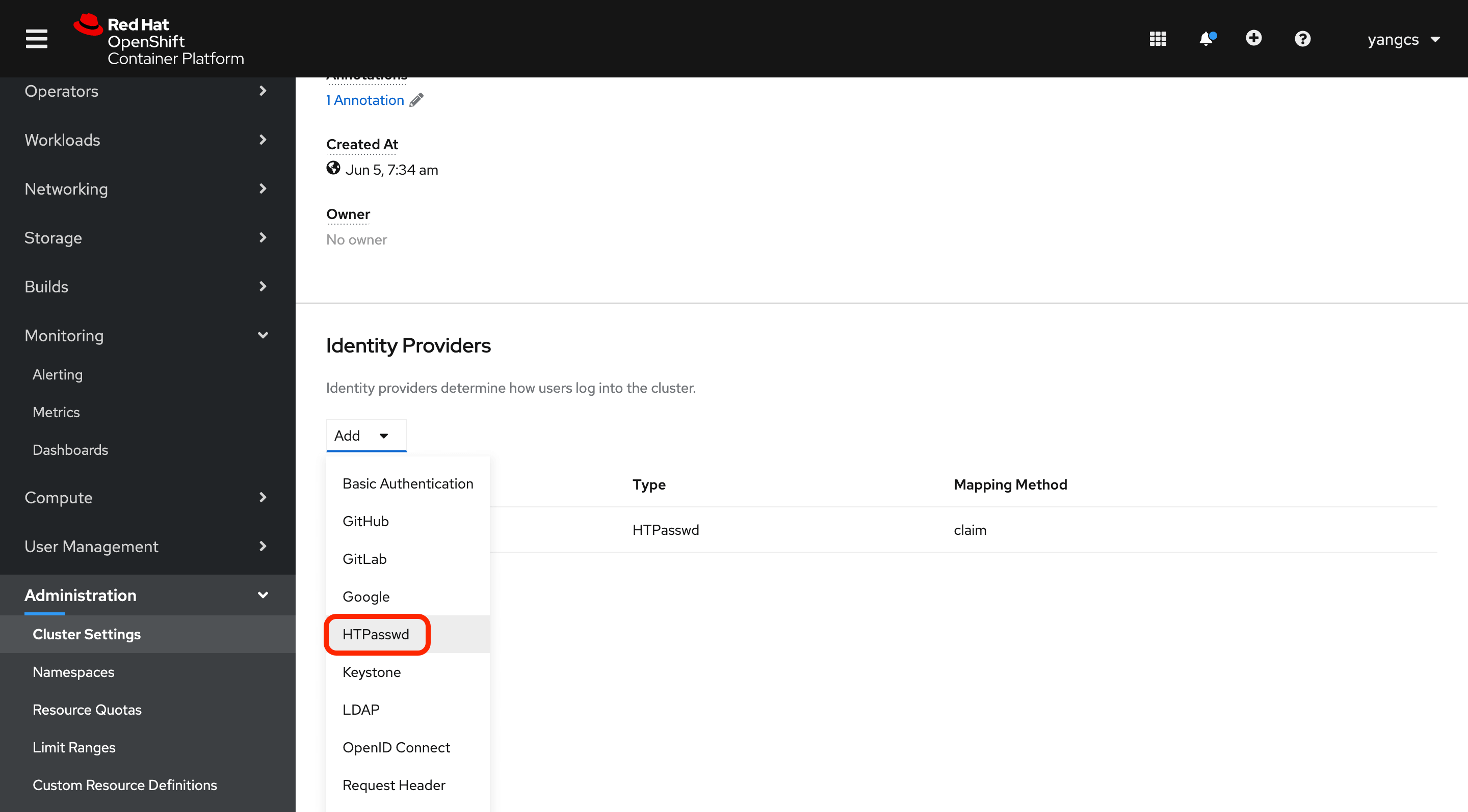

We can create our own administrator account with htpasswd, as follows:

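1. Create the users.htpasswd file:

```shell
# -c create the file, -B hash with bcrypt, -b take the password from the command line
cd /data/ocpinstall/auth && htpasswd -c -B -b users.htpasswd admin xxxxx
```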

2. Download users.htpasswd to your local machine.

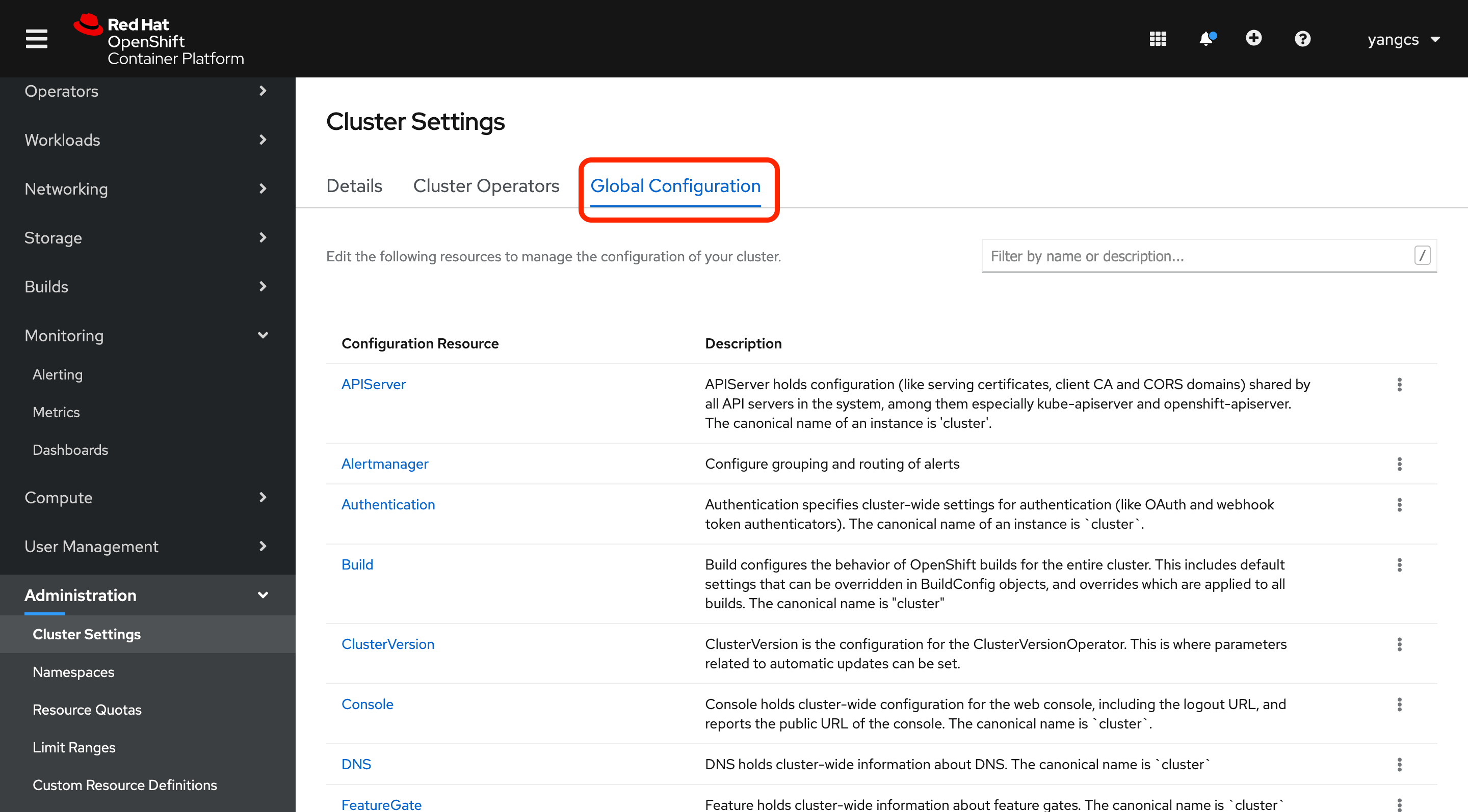

3. In the Web Console, open Global Configuration:

Find OAuth and open it, then add an Identity Provider of type HTPasswd and upload users.htpasswd.

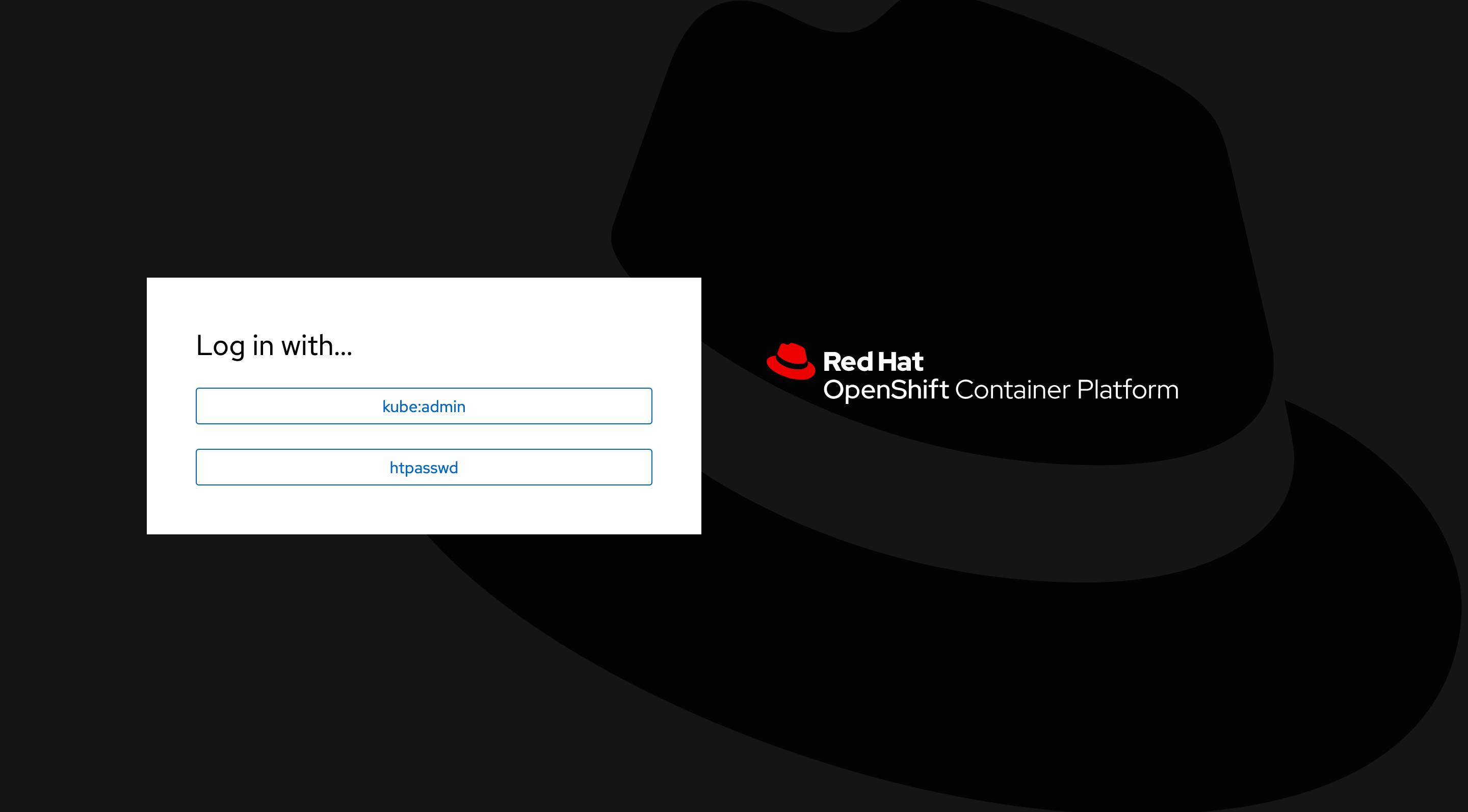

4. Log out of the current user, making sure you end up on the following screen:

Choose htpasswd, then log in with the user name and password created earlier.

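If logging out takes you straight to a user name and password form, that form still authenticates against kube:admin. If the provider selection shown above does not appear, enter the Web Console URL manually and you will be redirected to it.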

5. After logging in you can see the Administrator menu, but opening pages such as OAuth Details still fails with:

```
oauths.config.openshift.io "cluster" is forbidden: User "admin" cannot get resource "oauths" in API group "config.openshift.io" at the cluster scope
```

So grant the user cluster-admin privileges:

```shell
oc adm policy add-cluster-role-to-user cluster-admin admin
```
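Steps 2 to 5 can also be done entirely from the bastion with oc, without downloading the file and uploading it through the console. A sketch, assuming the users.htpasswd file created in step 1; the Secret name `htpass-secret`, the provider name `htpasswd` and the helper name are my own choices. Be aware that `oc apply` sets the whole `identityProviders` list of the `cluster` OAuth object, replacing any provider configured earlier in the console.

```shell
#!/usr/bin/env bash
# Hypothetical helper: register an htpasswd file as an identity provider and
# make the given user a cluster admin.
# Usage: setup_htpasswd_admin <path/to/users.htpasswd> <user>
setup_htpasswd_admin() {
  local htpasswd_file="$1" user="$2"
  # 1) store the htpasswd file in a Secret in openshift-config
  oc create secret generic htpass-secret \
    --from-file=htpasswd="$htpasswd_file" -n openshift-config || return 1
  # 2) point the cluster OAuth resource at that Secret
  oc apply -f - <<'EOF' || return 1
apiVersion: config.openshift.io/v1
kind: OAuth
metadata:
  name: cluster
spec:
  identityProviders:
  - name: htpasswd
    mappingMethod: claim
    type: HTPasswd
    htpasswd:
      fileData:
        name: htpass-secret
EOF
  # 3) grant cluster-admin, as in step 5
  oc adm policy add-cluster-role-to-user cluster-admin "$user"
}
```

Usage: `setup_htpasswd_admin /data/ocpinstall/auth/users.htpasswd admin`. The OAuth pods take a minute or two to roll out before the new provider appears on the login page.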

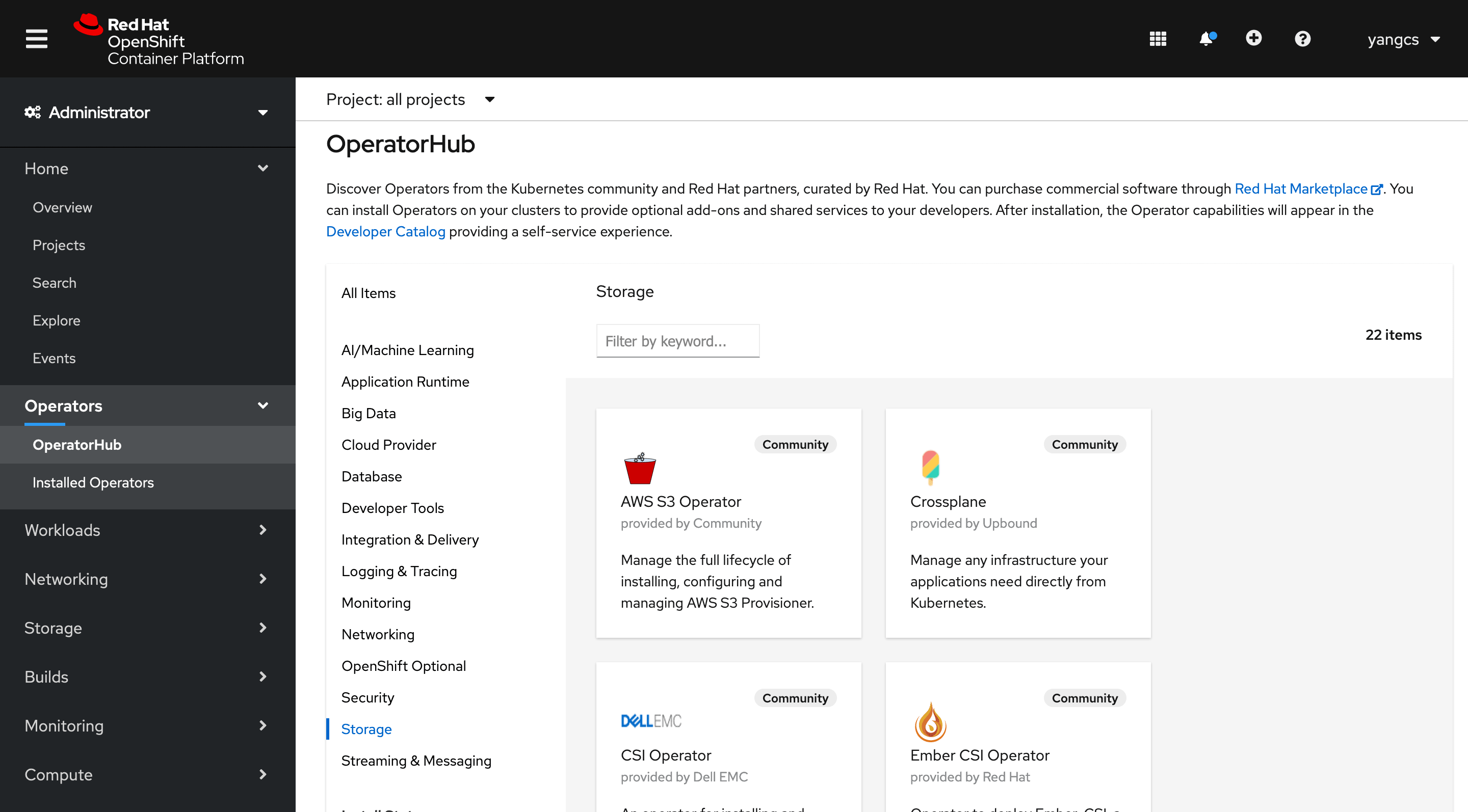

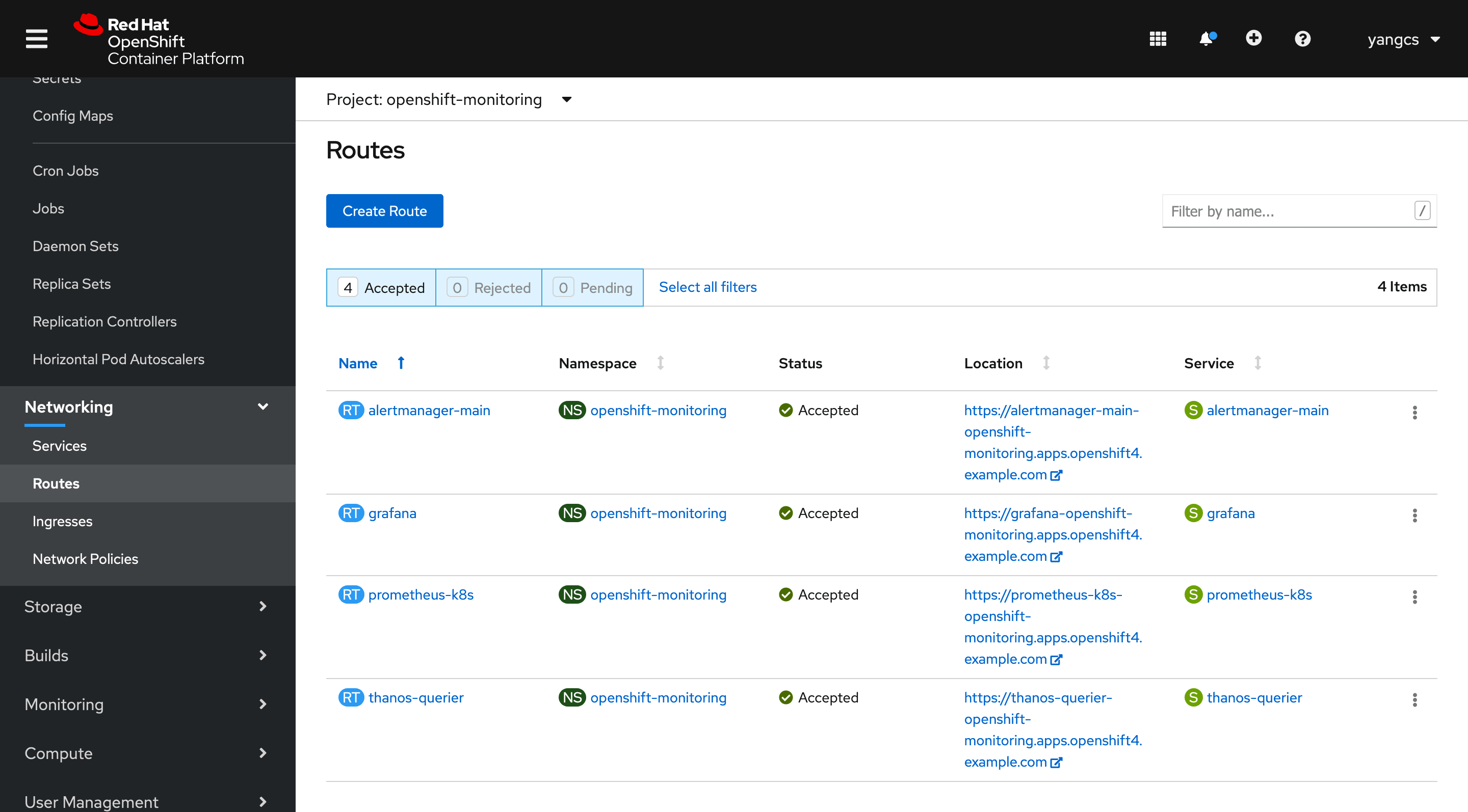

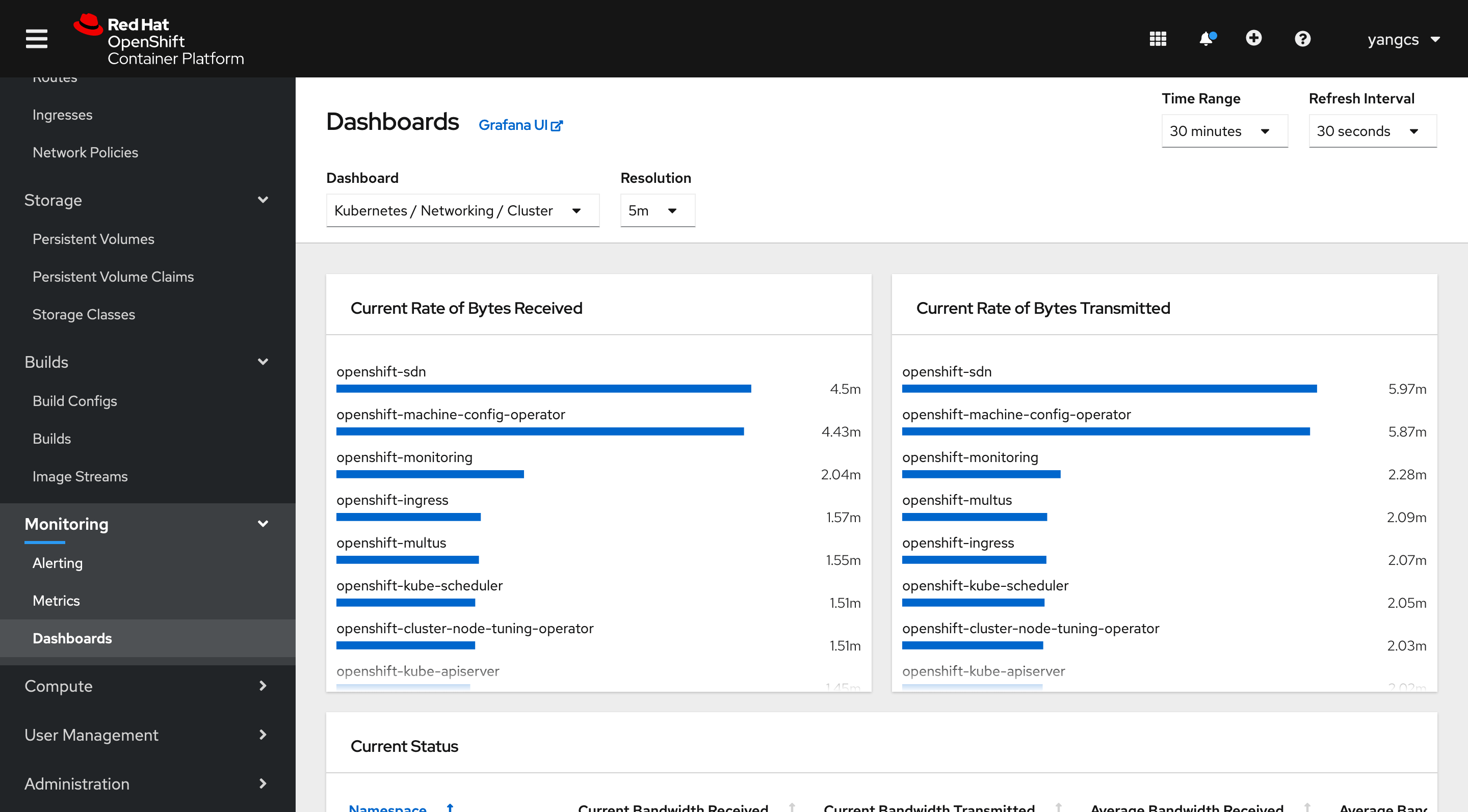

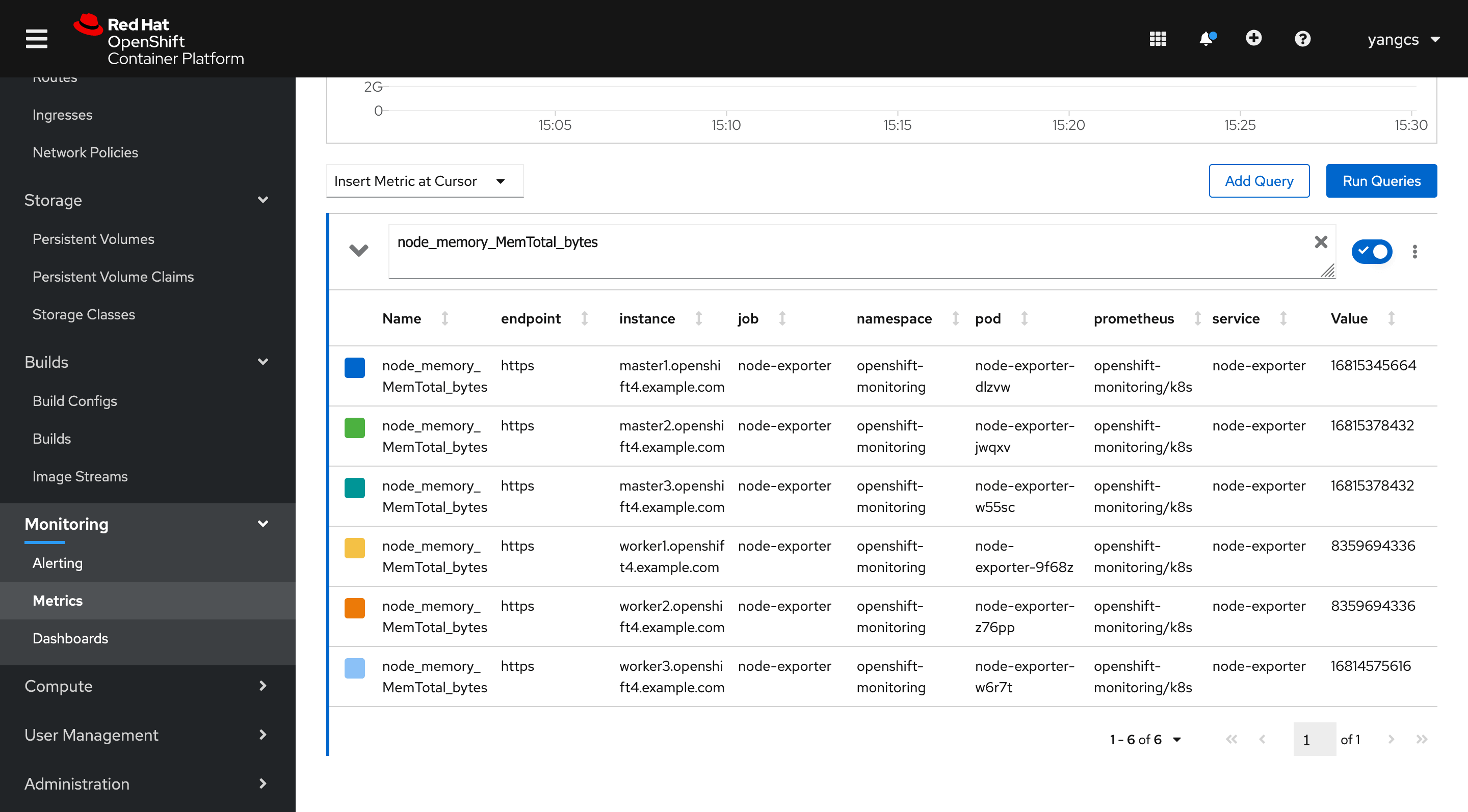

Some screenshots of the Web Console:

To delete the default kubeadmin account, run:

```shell
oc -n kube-system delete secrets kubeadmin
```
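Deleting the secret is irreversible, so it is worth confirming first that the new user really has cluster-wide rights. A sketch: it relies on `oc auth can-i` with `--as`, which requires the account you are currently using (kubeadmin, or the installer's system:admin kubeconfig) to be allowed to impersonate users; the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: delete the kubeadmin secret only if <user> can do
# everything in all namespaces.
# Usage: delete_kubeadmin_safely <user>
delete_kubeadmin_safely() {
  local user="$1"
  if oc auth can-i '*' '*' --all-namespaces --as="$user" >/dev/null 2>&1; then
    oc -n kube-system delete secrets kubeadmin
  else
    echo "refusing: $user does not have cluster-admin yet" >&2
    return 1
  fi
}
```

The kubeconfig in /data/ocpinstall/auth keeps working after kubeadmin is gone, because it authenticates with a client certificate rather than the kubeadmin password.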

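Post-installation configuration

ingress controller

The GitHub repository is cluster-ingress-operator. I inspected the router with `get deploy router-default -o yaml` (this may have been fixed in later releases) and, by deduction, traced the Deployment back to the operator: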

```
[root@bastion ~]# oc get ingresscontrollers -A
NAMESPACE                    NAME      AGE
openshift-ingress-operator   default   3d15h
```

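However, `oc explain ingresscontrollers.spec.endpointPublishingStrategy.hostNetwork` shows that this strategy lets you configure neither anti-affinity (mutual exclusion between router pods) nor dnsPolicy. A hostNetwork pod only uses cluster DNS when its dnsPolicy is `ClusterFirstWithHostNet`; otherwise it inherits the node's /etc/resolv.conf, so the router cannot resolve in-cluster Services: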

```
[root@master2 ~]# crictl pods --name router-default-fb744fb7f-hmmn5 -q
0a0c7cc6d1ad6815f7613fd758c5329c4265ddb6607f568b69e30fdafdfc0a52
[root@master2 ~]# crictl ps --pod=0a0c7cc6d1ad6815f7613fd758c5329c4265ddb6607f568b69e30fdafdfc0a52
CONTAINER       IMAGE                                                              CREATED      STATE     NAME     ATTEMPT   POD ID
b04c17fb1c58d   dd7aaceb9081f88c9ba418708f32a66f5de4e527a00c7f6ede50d55c93eb04ed   3 days ago   Running   router   1         0a0c7cc6d1ad6
[root@master2 ~]# crictl exec b04 cat /etc/resolv.conf
search openshift4.example.com
nameserver 10.225.45.250
[root@master2 ~]# crictl exec b04 curl kubernetes.default.svc.cluster.local
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
  0     0    0     0    0     0      0      0 --:--:-- --:--:-- --:--:--     0curl: (6) Could not resolve host: kubernetes.default.svc.cluster.local; Unknown error
FATA[0000] execing command in container failed: command terminated with exit code 6
```

I have filed an issue about this.
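To check whether a given cluster is affected, you can read the two relevant fields from the router Deployment. A sketch: it assumes the default router Deployment `router-default` in the `openshift-ingress` namespace, where the ingress operator creates it; the helper name is hypothetical. It only reports the problem; since the Deployment is operator-managed, editing it directly would likely be reverted.

```shell
#!/usr/bin/env bash
# Hypothetical helper: warn when the router runs on the host network without
# dnsPolicy ClusterFirstWithHostNet (and therefore without cluster DNS).
check_router_dns() {
  local out hostnet policy
  out=$(oc -n openshift-ingress get deploy router-default \
    -o jsonpath='{.spec.template.spec.hostNetwork}|{.spec.template.spec.dnsPolicy}') || return 1
  # "|" keeps an empty hostNetwork field from shifting the columns
  IFS='|' read -r hostnet policy <<< "$out"
  if [ "$hostnet" = "true" ] && [ "$policy" != "ClusterFirstWithHostNet" ]; then
    echo "router-default: hostNetwork with dnsPolicy=${policy:-<unset>}; cluster Services will not resolve"
    return 1
  fi
  echo "router-default: dnsPolicy=${policy:-<unset>} hostNetwork=${hostnet:-false}"
}
```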

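Next, adjust the number of ingress controller replicas. Because the Deployment is managed through the IngressController custom resource, avoid editing the Deployment's fields directly. Also, since we run the ingress controller on the masters, pin it there with a nodeSelector; label the nodes first: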

```shell
oc label node master1.openshift4.example.com ingressControllerDeploy=true
oc label node master2.openshift4.example.com ingressControllerDeploy=true
oc label node master3.openshift4.example.com ingressControllerDeploy=true

oc -n openshift-ingress-operator patch ingresscontroller default --type='merge' -p "$(cat <<- EOF
spec:
  replicas: 3
  nodePlacement:
    nodeSelector:
      matchLabels:
        ingressControllerDeploy: "true"
EOF
)"
```

Remove the bootstrap entries from haproxy

Do this yourself: remove the now-unused bootstrap entries from the load balancer configuration.
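The haproxy part can be scripted roughly as follows. This is a sketch that assumes the bootstrap entries are `server` lines named `bootstrap` or starting with `bootstrap.` (for example `server bootstrap <bootstrap-ip>:6443 check`), typically in the API (6443) and machine-config server (22623) backends; adjust the pattern to your own naming. The helper name is hypothetical, and it keeps a timestamped backup before editing.

```shell
#!/usr/bin/env bash
# Hypothetical helper: delete "server bootstrap..." lines from an HAProxy config.
# Usage: remove_bootstrap_backends [cfg, default /etc/haproxy/haproxy.cfg]
remove_bootstrap_backends() {
  local cfg="${1:-/etc/haproxy/haproxy.cfg}"
  cp -p "$cfg" "$cfg.bak.$(date +%s)" || return 1
  # match "server bootstrap" followed by whitespace, a dot, or end of line
  sed -i -E '/^[[:space:]]*server[[:space:]]+bootstrap([[:space:]]|\.|$)/d' "$cfg"
}
```

Afterwards validate and reload: `haproxy -c -f /etc/haproxy/haproxy.cfg && systemctl reload haproxy`.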

Local Storage Operator

See Installing the Local Storage Operator.

```shell
oc
oc new-project local-storage
```

clusteroperator

Check the clusteroperators. If a few of them report problems, you can troubleshoot them at your own pace once the workers are deployed.
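A compact way to list only the operators that need attention. A sketch: `co_conditions` prints `name|Available|Degraded` per operator using a kubectl-style jsonpath filter, and `unhealthy_operators` flags anything not Available or currently Degraded; both function names are hypothetical, and the filter is kept separate so it can also be fed saved output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: one line per cluster operator, "name|Available|Degraded".
co_conditions() {
  oc get clusteroperators -o jsonpath='{range .items[*]}{.metadata.name}{"|"}{.status.conditions[?(@.type=="Available")].status}{"|"}{.status.conditions[?(@.type=="Degraded")].status}{"\n"}{end}'
}

# Hypothetical filter: print operators that are not Available or are Degraded.
unhealthy_operators() {
  awk -F'|' '$2 != "True" || $3 == "True" { print $1 " available=" $2 " degraded=" $3 }'
}
```

Usage: `co_conditions | unhealthy_operators`; operators with no reported conditions show up with empty values.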